Selecting effective means to any end

How are psychographic personalization and persuasion profiling different from more familiar forms of personalization and recommendation systems? A big difference is that they focus on selecting the “how” or the “means” of inducing you to an action — rather than selecting the “what” or the “ends”. Given the recent interest in this kind of personalization, I wanted to highlight some excerpts from something Maurits Kaptein and I wrote in 2010.1

This post excerpts our 2010 article, a version of which was published as:

Kaptein, M., & Eckles, D. (2010). Selecting effective means to any end: Futures and ethics of persuasion profiling. In International Conference on Persuasive Technology (pp. 82-93). Springer Lecture Notes in Computer Science.

For more on this topic, see these papers.

We distinguish between those adaptive persuasive technologies that adapt the particular ends they try to bring about and those that adapt their means to some end.

First, there are systems that use models of individual users to select particular ends that are instantiations of more general target behaviors. If the more general target behavior is book buying, then such a system may select which specific books to present.

Second, adaptive persuasive technologies that change their means adapt the persuasive strategy that is used, independent of the end goal. One could offer the same book and, for some people, show the message that the book is recommended by experts, while for others emphasizing that the book is almost out of stock. Both messages may be true, but the effect of each differs between users.

Example 2. Ends adaptation in recommender systems

Pandora is a popular music service that tries to engage music listeners and persuade them into spending more time on the site and, ultimately, subscribe. For both goals it is beneficial for Pandora if users enjoy the music that is presented to them, which it achieves by matching the music offered to individual, potentially latent, music preferences. In doing so, Pandora adaptively selects the end (the actual song that is listened to and that could be purchased) rather than the means (the reasons presented for the selection of one specific song).

The distinction between end-adaptive persuasive technologies and means-adaptive persuasive technologies is important to discuss since adaptation in the latter case could be domain independent. In end adaptation, we can expect that little of the knowledge of the user that is gained by the system can be used in other domains (e.g. book preferences are likely minimally related to optimally specifying goals in a mobile exercise coach). Means adaptation is potentially quite the opposite. If an agent expects that a person is more responsive to authority claims than to other influence strategies in one domain, it may well be that authority claims are also more effective for that user than other strategies in a different domain. While we focus on novel means-adaptive systems, it is actually quite common for human influence agents to adaptively select their means.

Influence Strategies and Implementations

Means-adaptive systems select different means by which to bring about some attitude or behavior change. The distinction between adapting means and ends is an abstract and heuristic one, so it will be helpful to describe one particular way to think about means in persuasive technologies. One way to individuate means of attitude and behavior change is to identify distinct influence strategies, each of which can have many implementations. Investigators studying persuasion and compliance-gaining have varied in how they individuate influence strategies: Cialdini [5] elaborates on six strategies at length, Fogg [8] describes 40 strategies under a more general definition of persuasion, and others have listed over 100 [16].

Despite this variation in their individuation, influence strategies are a useful level of analysis that helps to group and distinguish specific influence tactics. In the context of means adaptation, human and computer persuaders can select influence strategies they expect to be more effective than other influence strategies. In particular, the effectiveness of a strategy can vary with attitude and behavior change goals. Different influence strategies are most effective in different stages of the attitude to behavior continuum [1]. These range from use of heuristics in the attitude stage to use of conditioning when a behavioral change has been established and needs to be maintained [11]. Fogg [10] further illustrates this complexity and the importance of considering variation in target behaviors by presenting a two-dimensional matrix of 35 classes of behavior change that vary by (1) the schedule of change (e.g., one time, on cue) and (2) the type of change (e.g., perform new behavior vs. familiar behavior). So even for persuasive technologies that do not adapt to individuals, selecting an influence strategy (the means) is important. We additionally contend that influence strategies are also a useful way to represent individual differences [9], differences which may be large enough that strategies that are effective on average have negative effects for some people.

Example 4. Backfiring of influence strategies

John just subscribed to a digital workout coaching service. This system measures his activity using an accelerometer and provides John feedback through a Web site. This feedback is accompanied by recommendations from a general practitioner to modify his workout regime. John has all through his life been known as authority averse and dislikes the top-down recommendation style used. After three weeks using the service, John’s exercise levels have decreased.

Persuasion Profiles

When systems represent individual differences as variation in responses to influence strategies — and adapt to these differences, they are engaging in persuasion profiling. Persuasion profiles are thus collections of expected effects of different influence strategies for a specific individual. Hence, an individual’s persuasion profile indicates which influence strategies — one way of individuating means of attitude and behavior change — are expected to be most effective.
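To make "collections of expected effects" concrete, here is a minimal sketch of how such a profile might be represented. The class name, field names, strategy labels, and numbers are all hypothetical illustrations, not taken from the paper or from any deployed system.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class PersuasionProfile:
    """Expected effects of influence strategies for one individual (hypothetical structure)."""
    user_id: str
    # Maps strategy name -> expected effect on the target behavior (arbitrary units).
    expected_effects: Dict[str, float] = field(default_factory=dict)

    def best_strategy(self) -> str:
        """Return the strategy with the largest expected effect."""
        if not self.expected_effects:
            raise ValueError("profile has no estimated effects yet")
        return max(self.expected_effects, key=self.expected_effects.get)


# Illustrative numbers only; a negative value marks a strategy expected to backfire.
john = PersuasionProfile(
    user_id="john",
    expected_effects={"authority": -0.10, "scarcity": 0.05, "consensus": 0.12},
)
print(john.best_strategy())
```

Note that nothing in this structure refers to a particular end (a book, a workout goal), which is what makes such a profile portable across contexts.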

Persuasion profiles can be based on demographic, personality, and behavioral data. Relying primarily on behavioral data has recently become a realistic option for interactive technologies, since vast amounts of data about individuals’ behavior in response to attempts at persuasion are currently collected. These data describe how people have responded to presentations of certain products (e.g. e-commerce) or have complied with requests by persuasive technologies (e.g. the DirectLife Activity Monitor [12]).

Existing systems record responses to particular messages (implementations of one or more influence strategies) to aid profiling. For example, Rapleaf uses responses by a user’s friends to particular advertisements to select the message to present to that user [2]. If influence attempts are identified as being implementations of particular strategies, then such systems can “borrow strength” in predicting responses to other implementations of the same strategy or related strategies. Many of these scenarios also involve the collection of personally identifiable information, so persuasion profiles can be associated with individuals across different sessions and services.
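One simple way to picture "borrowing strength" is partial pooling: each implementation's observed compliance rate is pulled toward the rate for the strategy as a whole, and implementations with few observations are pulled hardest. The sketch below uses a basic shrinkage formula as an assumption of mine; the paper does not specify an estimator, and the function name, `prior_strength` parameter, and counts are hypothetical.

```python
def pooled_rates(successes, trials, prior_strength=10.0):
    """Shrink each implementation's compliance rate toward the strategy-wide rate.

    successes and trials map implementation name -> counts for one user and one strategy.
    prior_strength acts like a number of pseudo-observations at the strategy-wide rate.
    """
    total_trials = sum(trials.values())
    if total_trials == 0:
        raise ValueError("no observations for this strategy")
    strategy_rate = sum(successes.values()) / total_trials
    return {
        name: (successes[name] + prior_strength * strategy_rate)
        / (trials[name] + prior_strength)
        for name in trials
    }


# Two hypothetical implementations of an "authority" strategy.
successes = {"expert_badge": 8, "doctor_quote": 1}
trials = {"expert_badge": 20, "doctor_quote": 2}
estimates = pooled_rates(successes, trials)
print(estimates)
```

Here the sparsely observed `doctor_quote` message has a raw rate of 0.5, but its estimate lands close to the strategy-wide rate, because two observations say little on their own.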

Consequences of Means Adaptation

In the remainder of this paper we will focus on the implications of the usage of persuasion profiles in means-adaptive persuasive systems. There are two properties of these systems which make this discussion important:

1. End-independence: Contrary to profiles used by end-adaptive persuasive systems, the knowledge gained about people in means-adaptive systems can be used independent from the end goal. Hence, persuasion profiles can be used independent of context and can be exchanged between systems.

2. Undisclosed: While the adaptation in end-adaptive persuasive systems is often most effective when disclosed to the user, this is not necessarily the case in means-adaptive persuasive systems powered by persuasion profiles. Selecting a different influence strategy is likely less salient than changing a target behavior and thus will often not be noticed by users.

Although the previous examples and the discussion of adaptive persuasive systems have already hinted at these two notions, we feel it is important to examine each in more detail.

End-Independence

Means-adaptive persuasive technologies are distinctive in their end-independence: a persuasion profile created in one context can be applied to bringing about other ends in that same context or to behavior or attitude change in a quite different context. This feature of persuasion profiling is best illustrated by contrast with end adaptation.

Any adaptation that selects the particular end (or goal) of a persuasive attempt is inherently context-specific. Though there may be associations between individual differences across contexts (e.g., between book preferences and political attitudes), these associations are themselves specific to pairs of contexts. On the other hand, persuasion profiles are designed and expected to be independent of particular ends and contexts. For example, we propose that a person’s tendency to comply more to appeals by experts than to those by friends is present both when looking at compliance to a medical regime as well as purchase decisions.

It is important to clarify exactly what is required for end-independence to obtain. If we say that a persuasion profile is end-independent, then this does not imply that the effectiveness of influence strategies is constant across all contexts. Consistent with the results reviewed in section 3, we acknowledge that influence strategy effectiveness depends on, e.g., the type of behavior change. That is, we expect that the most effective influence strategy for a system to employ, even given the user’s persuasion profile, would depend on both context and target behavior. Instead, end-independence requires that the difference between the average effect of a strategy for the population and the effect of that strategy for a specific individual is relatively consistent across contexts and ends.
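This requirement can be written down in the analysis-of-variance language that the paper's footnote uses. The decomposition below is one possible formalization, offered as a sketch: the symbols are my own notation, with $i$ indexing individuals, $s$ strategies, and $c$ contexts.

```latex
% Response of individual i to strategy s in context c
Y_{isc} = \mu + \pi_i + \alpha_s + \gamma_c
        + (\pi\alpha)_{is} + (\pi\gamma)_{ic} + (\alpha\gamma)_{sc}
        + (\pi\alpha\gamma)_{isc} + \varepsilon_{isc}

% Individual i's deviation from the population-average effect of strategy s in context c
\Delta_{isc} = (\pi\alpha)_{is} + (\pi\alpha\gamma)_{isc}

% End-independence: the deviation barely depends on c, i.e.
(\pi\alpha\gamma)_{isc} \approx 0
\quad\text{while } (\alpha\gamma)_{sc} \text{ may be large.}
```

Under this reading, the persuasion profile of individual $i$ is the vector of person-by-strategy terms $(\pi\alpha)_{is}$, and the strategy-by-context term $(\alpha\gamma)_{sc}$ is free to vary, which is why the best strategy can still differ by context.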

Implications of end-independence.

From end-independence, it follows that persuasion profiles could potentially be created by, and shared with, a number of systems that use and modify these profiles. For example, the profile constructed from observing a user’s online shopping behavior can be of use in increasing compliance in saving energy. Behavioral measures in the latter two contexts can contribute to refining the existing profile.2

Not only could persuasion profiles be used across contexts within a single organization, but there is the option of exchanging the persuasion profiles between corporations, governments, other institutions, and individuals. A market for persuasion profiles could develop [9], as currently exists for other data about consumers. Even if a system that implements persuasion profiling does so ethically, once constructed the profiles can be used for ends not anticipated by its designers.

Persuasion profiles are another kind of information about individuals collected by corporations that individuals may or may not have effective access to. This raises issues of data ownership. Do individuals have access to their complete persuasion profiles or other indicators of the contents of the profiles? Are individuals compensated for this valuable information [14]? If an individual wants to use Amazon’s persuasion profile to jump-start a mobile exercise coach’s adaptation, there may or may not be technical and/or legal mechanisms to obtain and transfer this profile.

Non-disclosure

Means-adaptive persuasive systems are able and likely to not disclose that they are adapting to individuals. This can be contrasted with end adaptation, in which it is often advantageous for the agent to disclose the adaptation and the adaptation is potentially easy to detect. For example, when Amazon recommends books for an individual it makes clear that these are personalized recommendations, thus benefiting from effects of apparent personalization and enabling it to present reasons why these books were recommended. In contrast, with means adaptation, not only may the results of the adaptation be less visible to users (e.g. emphasizing either “Pulitzer Prize winning” or “International bestseller”), but disclosure of the adaptation may reduce the target attitude or behavior change.

It is hypothesized that the effectiveness of social influence strategies is, at least partly, caused by automatic processes. According to dual-process models [4], under low elaboration message variables manipulated in the selection of influence strategies lead to compliance without much thought. These dual-process models distinguish between central (or systematic) processing, which is characterized by elaboration on and consideration of the merits of presented arguments, and peripheral (or heuristic) processing, which is characterized by responses to cues associated with, but peripheral to the central arguments of, the advocacy through the application of simple, cognitively “cheap”, but fallible rules [13]. Disclosure of means adaptation may increase elaboration on the implementations of the selected influence strategies, decreasing their effectiveness if they operate primarily via heuristic processing. More generally, disclosure of means adaptation is a disclosure of persuasive intent, which can increase elaboration and resistance to persuasion.

Implications of non-disclosure.

The fact that persuasion profiles can be obtained and used without disclosing this to users is potentially a cause for concern. Potential reductions in effectiveness upon disclosure incentivize system designs to avoid disclosure of means adaptation.

Non-disclosure of means adaptation may have additional implications when combined with value being placed on the construction of an accurate persuasion profile. This requires some explanation. A simple system engaged in persuasion profiling could select influence strategies and implementations based on which is estimated to have the largest effect in the present case; the model would thus be engaged in passive learning. However, we anticipate that systems will take a more complex approach, employing active learning techniques [e.g., 6]. In active learning the actions selected by the system (e.g., the selection of the influence strategy and its implementation) are chosen based not only on the value of any resulting attitude or behavior change but also on the value of predicted improvements to the model resulting from observing the individual’s response. Increased precision, generality, or comprehensiveness of a persuasion profile may be valued (a) because the profile will be more effective in the present context or (b) because a more precise profile would be more effective in another context or more valuable in a market for persuasion profiles.

These latter cases involve systems taking actions that are estimated to be non-optimal for their apparent goals. For example, a mobile exercise coach could present a message that is not estimated to be the most effective in increasing overall activity level in order to build a more precise, general, or comprehensive persuasion profile. Users of such a system might reasonably expect that it is designed to be effective in coaching them, but it is in fact also selecting actions for other reasons, e.g., selling precise, general, and comprehensive persuasion profiles is part of the company’s business plan. That is, if a system is designed to value constructing a persuasion profile, its behavior may differ substantially from its anticipated core behavior.
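The contrast between passive and active selection can be sketched in a few lines. This is an illustration under stated assumptions, not the method of the paper or of Cohn et al. [6]: the information value is approximated by a simple uncertainty bonus that shrinks as observations accumulate (in the spirit of upper-confidence-bound rules), and the function name, `info_weight` parameter, and numbers are hypothetical.

```python
import math


def choose_strategy(estimates, info_weight=0.0):
    """Pick the strategy maximizing estimated effect plus an information bonus.

    estimates maps strategy -> (mean_effect, n_observations).
    info_weight = 0 gives the purely passive (greedy) choice.
    """
    def score(item):
        _, (mean, n) = item
        # The bonus is larger for strategies with few observations (more to learn).
        return mean + info_weight / math.sqrt(n + 1)

    return max(estimates.items(), key=score)[0]


# Hypothetical estimates for one user: authority is well studied, scarcity is not.
estimates = {"authority": (0.10, 400), "scarcity": (0.08, 3)}

print(choose_strategy(estimates))                   # passive choice
print(choose_strategy(estimates, info_weight=0.5))  # choice that also values learning
```

With `info_weight` set to zero the system shows the message it expects to work best. Once learning is valued, it picks the less-tested strategy even though its estimated effect is lower, which is the kind of behavior a user expecting a pure coach would not anticipate.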

[1] Aarts, E.H.L., Markopoulos, P., Ruyter, B.E.R.: The persuasiveness of ambient intelligence. In: Petkovic, M., Jonker, W. (eds.) Security, Privacy and Trust in Modern Data Management. Springer, Heidelberg (2007)

[2] Baker, S.: Learning, and profiting, from online friendships. BusinessWeek 9(22) (May 2009)

[3] Berdichevsky, D., Neuenschwander, E.: Toward an ethics of persuasive technology. Commun. ACM 42(5), 51–58 (1999)

[4] Cacioppo, J.T., Petty, R.E., Kao, C.F., Rodriguez, R.: Central and peripheral routes to persuasion: An individual difference perspective. Journal of Personality and Social Psychology 51(5), 1032–1043 (1986)

[5] Cialdini, R.: Influence: Science and Practice. Allyn & Bacon, Boston (2001)

[6] Cohn, D.A., Ghahramani, Z., Jordan, M.I.: Active learning with statistical models. Journal of Artificial Intelligence Research 4, 129–145 (1996)

[7] Eckles, D.: Redefining persuasion for a mobile world. In: Fogg, B.J., Eckles, D. (eds.) Mobile Persuasion: 20 Perspectives on the Future of Behavior Change. Stanford Captology Media, Stanford (2007)

[8] Fogg, B.J.: Persuasive Technology: Using Computers to Change What We Think and Do. Morgan Kaufmann, San Francisco (2002)

[9] Fogg, B.J.: Protecting consumers in the next tech-ade, U.S. Federal Trade Commission hearing (November 2006), http://www.ftc.gov/bcp/workshops/techade/pdfs/transcript_061107.pdf

[10] Fogg, B.J.: The behavior grid: 35 ways behavior can change. In: Proc. of Persuasive Technology 2009, p. 42. ACM, New York (2009)

[11] Kaptein, M., Aarts, E.H.L., Ruyter, B.E.R., Markopoulos, P.: Persuasion in ambient intelligence. Journal of Ambient Intelligence and Humanized Computing 1, 43–56 (2009)

[12] Lacroix, J., Saini, P., Goris, A.: Understanding user cognitions to guide the tailoring of persuasive technology-based physical activity interventions. In: Proc. of Persuasive Technology 2009, vol. 350, p. 9. ACM, New York (2009)

[13] Petty, R.E., Wegener, D.T.: The elaboration likelihood model: Current status and controversies. In: Chaiken, S., Trope, Y. (eds.) Dual-process theories in social psychology, pp. 41–72. Guilford Press, New York (1999)

[14] Prabhaker, P.R.: Who owns the online consumer? Journal of Consumer Marketing 17, 158–171 (2000)

[15] Rawls, J.: The independence of moral theory. In: Proceedings and Addresses of the American Philosophical Association, vol. 48, pp. 5–22 (1974)

[16] Rhoads, K.: How many influence, persuasion, compliance tactics & strategies are there? (2007), http://www.workingpsychology.com/numbertactics.html

[17] Schafer, J.B., Konstan, J.A., Riedl, J.: E-commerce recommendation applications. Data Mining and Knowledge Discovery 5(1/2), 115–153 (2001)

- We were of course influenced by B.J. Fogg’s previous use of the term ‘persuasion profiling’, including in his comments to the Federal Trade Commission in 2006.

- This point can also be made in the language of interaction effects in analysis of variance: Persuasion profiles are estimates of person–strategy interaction effects. Thus, the end-independence of persuasion profiles requires not that the two-way strategy–context interaction effect is small, but that the three-way person–strategy–context interaction is small.

Is thinking about monetization a waste of our best minds?

I just recently watched this talk by Jack Conte, musician, video artist, and cofounder of Patreon:

Jack dives into how rapidly the Internet has disrupted the business of selling reproducible works, such as recorded music, investigative reporting, etc. And how important (and exciting) it is to build new ways for the people who create these works to be able to make a living doing so. Of course, Jack has some particular ways of doing that in mind, such as subscriptions and subscription-like patronage of artists, for example via Patreon.

But this also made me think about this much-repeated1 quote from Jeff Hammerbacher (formerly of Facebook, Cloudera, and now doing bioinformatics research):

“The best minds of my generation are thinking about how to make people click ads. That sucks.”

I certainly agree that many other types of research can be very important and impactful, and often more so than working on data infrastructure, machine learning, market design, etc. for advertising. However, Jack Conte’s talk certainly helped make the case for me that monetization of “content” is something that has been disrupted already but needs some of the best minds to figure out new ways for creators of valuable works to make money.

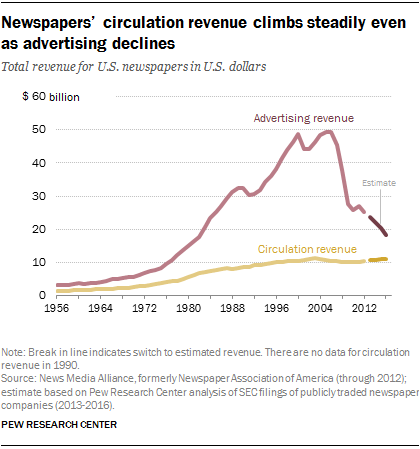

Some of this might be coming up with new arrangements altogether. But it seems like this will continue to occur partially through advertising revenue. Jack highlights how little ad revenue he often saw, even as his videos were getting millions of views. And newspapers have been less able to monetize online attention through advertising than they had been able to in print.

Some of this may reflect that advertising dollars were just really poorly allocated before. But improving this situation will require a mix of work on advertising — certainly beyond just getting people to click on ads — such as providing credible measurement of the effects and ROI of advertising, improving targeting of advertising, and more.

Another side of this question is that advertising remains an important part of our culture and force for attitude and behavior change. Certainly looking back on 2016 right now, many people are interested in what effects political advertising had.

So maybe it isn’t so bad if at least some of our best minds are working on online advertising.

- So often repeated that Hammerbacher said to Charlie Rose, “That’s going to be on my tombstone, I think.”

Interpreting discrete-choice models

Are individuals random-utility maximizers? Or do individuals have private knowledge of shocks to their utility?

“McFadden (1974) observed that the logit, probit, and similar discrete-choice models have two interpretations. The first interpretation is that of individual random utility. A decisionmaker draws a utility function at random to evaluate a choice situation. The distribution of choices then reflects the distribution of utility, which is the object of econometric investigation. The second interpretation is that of a population of decision makers. Each individual in the population has a deterministic utility function. The distribution of choices in the population reflects the population distribution of preferences. … One interpretation of this game theoretic approach is that the econometrician confronts a population of random-utility maximizers whose decisions are coupled. These models extend the notion of Nash equilibrium to random-utility choice. The other interpretation views an individual’s shock as known to the individual but not to others in the population (or to the econometrician). In this interpretation, the Brock-Durlauf model is a Bayes-Nash equilibrium of a game with independent types, where the type of individual i is the pair (x_i, e_i). Information is such that the first component of each player i’s type is common knowledge, while the second is known only to player i.” (Blume, Brock, Durlauf & Ioannides. 2011. Identification of Social Interactions. Handbook of Social Economics, Volume 1B.)
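The two interpretations differ in where the randomness lives, not in the choice probabilities the econometrician works with. As a reminder of what those look like in the logit case, here is a small sketch. It assumes the standard setup in which the shocks are i.i.d. type I extreme value, so the probability of choosing option j is exp(v_j) / Σ_k exp(v_k); the function name and the example utilities are illustrative.

```python
import math


def logit_choice_probabilities(utilities):
    """Multinomial logit: P(j) = exp(v_j) / sum_k exp(v_k)."""
    shift = max(utilities)  # subtract the max for numerical stability
    weights = [math.exp(v - shift) for v in utilities]
    total = sum(weights)
    return [w / total for w in weights]


# Systematic utilities of two options; the second is higher by log(3).
probabilities = logit_choice_probabilities([0.0, math.log(3.0)])
print(probabilities)
```

Read as individual random utility, these numbers describe how often one decision maker would pick each option across draws of the shock. Read as a population, they describe the share of people whose fixed preferences favor each option.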

Aardvark’s use of Wizard of Oz prototyping to design their social interfaces

The Wall Street Journal’s Venture Capital Dispatch reports on how Aardvark, the social question asking and answering service recently acquired by Google, used a Wizard of Oz prototype to learn about how their service concept would work without building all the tech before knowing if it was any good.

Aardvark employees would get the questions from beta test users and route them to users who were online and would have the answer to the question. This was done to test out the concept before the company spent the time and money to build it, said Damon Horowitz, co-founder of Aardvark, who spoke at Startup Lessons Learned, a conference in San Francisco on Friday.

“If people like this in super crappy form, then this is worth building, because they’ll like it even more,” Horowitz said of their initial idea.

At the same time it was testing a “fake” product powered by humans, the company started building the automated product to replace humans. While it used humans “behind the curtain,” it gained the benefit of learning from all the questions, including how to route the questions and the entire process with users.

This is a really good idea, as I’ve argued before on this blog and in a chapter for developers of mobile health interventions. What better way to (a) learn about how people will use and experience your service and (b) get training data for your machine learning system than to have humans-in-the-loop run the service?

My friend Chris Streeter wondered whether this was all done by Aardvark employees or whether workers on Amazon Mechanical Turk may have also been involved, especially in identifying the expertise of the early users of the service so that the employees could route the questions to the right place. I think this highlights how different parts of a service can draw on human and non-human intelligence in a variety of ways — via a micro-labor market, using skilled employees who will gain hands-on experience with customers, etc.

I also wonder what UIs the humans-in-the-loop used to accomplish this. It’d be great to get a peek. I’d expect that these were certainly rough around the edges, as was the Aardvark customer-facing UI.

Aardvark does a good job of being a quite sociable agent (e.g., when using it via instant messaging) that also gets out of the way of the human–human interaction between question askers and answerers. I wonder how the language used by humans to coordinate and hand off questions may have played into creating a positive para-social interaction with vark.

Not just predicting the present, but the future: Twitter and upcoming movies

Search queries have been used recently to “predict the present”, as Hal Varian has called it. Now some initial use of Twitter chatter to predict the future:

The chatter in Twitter can accurately predict the box-office revenues of upcoming movies weeks before they are released. In fact, Tweets can predict the performance of films better than market-based predictions, such as Hollywood Stock Exchange, which have been the best predictors to date. (Kevin Kelly)

Here is the paper by Asur and Huberman from HP Labs. Also see a similar use of online discussion forums.

But the obvious question from my previous post is, how much improvement do you get by adding more inputs to the model? That is, how does the combined Hollywood Stock Exchange and Twitter chatter model perform? The authors report adding the number of theaters the movie opens in to both models, but not combining them directly.