Aardvark’s use of Wizard of Oz prototyping to design their social interfaces

The Wall Street Journal’s Venture Capital Dispatch reports on how Aardvark, the social question-asking and question-answering service recently acquired by Google, used a Wizard of Oz prototype to learn how their service concept would work without building all the tech before knowing whether it was any good.

Aardvark employees would get the questions from beta test users and route them to users who were online and would have the answer to the question. This was done to test out the concept before the company spent the time and money to build it, said Damon Horowitz, co-founder of Aardvark, who spoke at Startup Lessons Learned, a conference in San Francisco on Friday.

“If people like this in super crappy form, then this is worth building, because they’ll like it even more,” Horowitz said of their initial idea.

At the same time it was testing a “fake” product powered by humans, the company started building the automated product to replace humans. While it used humans “behind the curtain,” it gained the benefit of learning from all the questions, including how to route the questions and the entire process with users.

This is a really good idea, as I’ve argued before on this blog and in a chapter for developers of mobile health interventions. What better way to (a) learn about how people will use and experience your service and (b) get training data for your machine learning system than to have humans-in-the-loop run the service?
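To make the humans-in-the-loop idea concrete, here is a minimal Python sketch of a router in which a human operator makes each routing decision until a model is confident enough to take over, and every human decision is logged as a labeled example. Everything here (`route_question`, `RoutingLog`, the operator and model callables, and the convention that a model returns `None` when unsure) is hypothetical; the reporting above doesn't describe Aardvark's actual tools or code.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# Hypothetical signatures: each takes (question, online_users) and picks a username.
Chooser = Callable[[str, List[str]], str]
MaybeChooser = Callable[[str, List[str]], Optional[str]]


@dataclass
class RoutingLog:
    """Human routing decisions, kept as (question, chosen answerer) training pairs."""
    examples: List[Tuple[str, str]] = field(default_factory=list)

    def record(self, question: str, answerer: str) -> None:
        self.examples.append((question, answerer))


def route_question(
    question: str,
    online_users: List[str],
    human_operator: Chooser,
    log: RoutingLog,
    model: Optional[MaybeChooser] = None,
) -> str:
    """Route a question, letting a model decide only when it is confident.

    A model returning None means "not sure"; the human behind the curtain
    then decides, and that decision is logged for later training.
    """
    if model is not None:
        choice = model(question, online_users)
        if choice is not None:
            return choice
    choice = human_operator(question, online_users)
    log.record(question, choice)
    return choice


if __name__ == "__main__":
    log = RoutingLog()

    def operator(q: str, users: List[str]) -> str:
        # Stand-in for an employee picking someone who seems to know about food.
        return "ana" if "taco" in q else users[0]

    print(route_question("Best taco place in the Mission?", ["ben", "ana"], operator, log))
    print(log.examples)
```

The human path and the automated path share one interface, so a model can be swapped in gradually, question by question, while the log of human decisions keeps growing.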

My friend Chris Streeter wondered whether this was all done by Aardvark employees or whether workers on Amazon Mechanical Turk may have also been involved, especially in identifying the expertise of the early users of the service so that the employees could route the questions to the right place. I think this highlights how different parts of a service can draw on human and non-human intelligence in a variety of ways — via a micro-labor market, using skilled employees who will gain hands-on experience with customers, etc.

I also wonder what UIs the humans-in-the-loop used to accomplish this. It’d be great to get a peek. I’d expect these were rough around the edges, as was the Aardvark customer-facing UI.

Aardvark does a good job of being a quite sociable agent (e.g., when using it via instant messaging) that also gets out of the way of the human–human interaction between question askers and answerers. I wonder how the language used by humans to coordinate and hand off questions may have played into creating a positive para-social interaction with vark.

Apple’s “trademarked” chat bubbles: source equivocality in mobile apps and services

TechCrunch and others have been joking about Apple’s rejection of an app because it uses shiny chat bubbles, which the Apple representative claimed were trademarked:

Chess Wars was being rejected after the six week wait [because] the bubbles in its chat rooms are too shiny, and Apple has trademarked that bubbly design. […] The representative said Stump needed to make the bubbles “less shiny” and also helpfully suggested that he make the bubbles square, just to be sure.

One thing that is quite striking in this situation is that it is at odds with Apple’s long history of strongly encouraging third-party developers to follow many UI guidelines, guidelines that, when followed, make third-party apps blend in as if they were native.1

It’s important not to read too much into this (especially since we don’t know what Apple’s more considered policy on this will end up being), but it is interesting to think about how responsibility gets spread around among mobile applications, services, and devices, and how this may differ from existing models on the desktop.

My sense is that experienced desktop computer users understand, at least in the most important cases, how to distinguish the sources of their good and bad experiences. For example, “locomotion” is a central metaphor in using the Web, as opposed to the conversation and manipulation metaphors of command-line and natural language interfaces and of WIMP: we “go to” a site (see this interview with Terry Winograd). The locomotion metaphor helps people distinguish what their own computer is contributing from what some distant, third-party “site” is contributing.

This is complex even on the Web, and many of these genre rules are now getting mixed up. Google has Gmail running in your browser, but on your computer. Cameraphones are recognizing objects you point them at, some by analyzing the image on the device and some by sending the image to a server to be analyzed.

This issue is sometimes identified by academics as one of source orientation and source equivocality. Though there has been some great research in this area, there is a lot we don’t know and the field is in flux: people’s beliefs about systems are changing and the important technologies and genres are still emerging.

If there is one important place to start thinking about the craziness of the current situation of ubiquitous source equivocality, it is James Beniger’s “Personalization of mass media and the growth of pseudo-community” (1987), which predates much of the technology at issue.

- I was led to think this by a commenter on TechCrunch, Dan Grossman, who pointed out this long history. [↩]

Self-verification strategies in human–computer interaction

People believe many things about themselves. Having an accurate view of oneself is valuable because it can be used to generate both expectations that will be fulfilled and plans that can be successfully executed. But because we humans are cognitively limited agents, we are under pressure to have self-views that are not only accurate but also efficient.

In his new book, How We Get Along, philosopher David Velleman puts it this way:

At one extreme, I have a way of interpreting myself, a way that I want you to interpret me, a way that I think you do interpret me, a way that I think you suspect me of wanting you to interpret me, a way that I think you suspect me of thinking you do interpret me, and so on, each of these interpretations being distinct from all the others, and all of them being somehow crammed into my self-conception. At the other extreme, there is just one interpretation of me, which is common property between us, in that we not only hold it but interpret one another as holding it, and so on. If my goal is understanding, then the latter interpretation is clearly preferable, because it is so much simpler while being equally adequate, fruitful, and so on. (Lecture 3)

That is, one way my self-views can be efficient representations is if they serve double duty as others’ views of me — if my self-views borrow from others’ views of me and if my models of others’ views of me likewise borrow from my self-views.

Sometimes this back and forth between my self-view and my understanding of how others view me can seem counter to self-interest. People behave in ways that confirm others’ expectations of them, even when these expectations are negative (Snyder & Swann, 1978; for a review, see Snyder & Stukas, 1999). And people interact with other people in ways such that their self-views are not challenged by others’ views of them, so that their self-views can double as representations of the others’ views of them, even when this means taking other people as having negative views of them (Swann & Read, 1981).

Self-verification and behavioral confirmation strategies

People use multiple strategies for achieving a match between their self-views and others’ views of them. These strategies come in at different stages of social interaction.

Prior to and in anticipation of interaction, people seek out, and more thoroughly engage with, information and people they expect to be consistent with their self-views. For example, they spend more time reading statements about themselves that they expect to be consistent with their self-views, even if those particular self-views are negative.

During interaction, people behave in ways that elicit views of them from others that are consistent with their self-views. This is especially true when their self-views are being challenged, say because someone expresses a positive view of an aspect of a person who sees that aspect of themselves negatively. People can “go out of their way” to behave in ways that elicit negative views from others. On the other hand, people can change their self-views and their behavior to match the expectations of others; this primarily happens when a person is not certain of their view of that particular aspect of themselves.

After interaction, people better remember expressions of others’ views of them that are consistent with their own. They can also think about others’ inconsistent views in ways that construe them as non-conflicting. In the long term, people gravitate toward others, including friends and spouses, who view them as they view themselves. Likewise, people seem to push away others who have different views of them.

Do people self-verify in interacting with computers?

Given that people engage in this array of self-verification strategies in interactions with other people, we might expect that they would do the same in interacting with computers, including mobile phones, on-screen agents, voices, and services.

One reason to think that people do self-verify in human–computer interaction is that people respond to computers in a myriad of social ways: people reciprocate with computers, take on computers as teammates, treat computer personalities like human personalities, etc. (for a review see Nass & Moon, 2000). So I expect that people use these same strategies when using interactive technologies — including personal computers, mobile phones, robots, cars, online services, etc.

While empirical research should be carried out to test this basic, well-motivated hypothesis, the broader implications of this idea, and its connections to how people understand new technological systems, are even more exciting and important.

When systems model users

Since the 1980s, it has been quite common for system designers to think about the mental models people have of systems — and how these models are shaped by factors both in and out of the designer’s control (Gentner & Stevens, 1983). A familiar goal has been to lead people to a mental model that “matches” a conceptual model developed by the designer and is approximately equivalent to a true system model as far as common inputs and outputs go.

Many interactive systems develop a representation of their users. So in order to have a good mental model of these systems, people must represent how the system views them. This involves many of the same trade-offs considered above.

These considerations point out some potential problems for such systems. Technologists sometimes talk about the ability to provide serendipitous discovery. Quantifying aspects of one’s own life, including social behavior (e.g., Kass, 2007) and health, is a current trend in research, product development, and DIY self-experimentation. While this collected data is sometimes analyzed by its subject (e.g., because the subject is a researcher or hacker who just wants to dig into the data), to the extent that this trend goes mainstream, it will require simplification: building and presenting readily understandable models and views of these systems’ users.

The use of self-verification strategies and behavioral confirmation when interacting with computer systems, not only with people, thus presents a challenge to the ability of such systems to find users who are truly open to self-discovery. I think many of these same ideas apply equally to context-aware services on mobile phones and to services that model one’s social network (even if they don’t present that model outright).

Social responses or more general confirmation bias

That people may self-verify with computers as well as people raises a further question about both self-verification theory and social responses to communication technologies theory (aka the “Media Equation”). We may wonder just how general these strategies and responses are: are these strategies and responses distinctively social?

Prior work on self-verification has left open the degree to which self-verification strategies are particular to self-views, rather than general to all relatively important and confident beliefs and attitudes. Likewise, it is unclear to what extent all experiences, rather than just social interaction (including reading statements written or selected by another person), that might challenge or confirm a self-view are subject to these self-verification strategies.

Inspired by Velleman’s description above, we might think that it is simply that others’ views of us have a dangerous potential to cause an explosion in the complexity of the world we need to model (“I have a way of interpreting myself, a way that I want you to interpret me, a way that I think you do interpret me, a way that I think you suspect me of wanting you to interpret me, a way that I think you suspect me of thinking you do interpret me, and so on”). Thus, if other systems can prompt this same regress, then the same frugality with our cognitions should lead to self-verification and behavioral confirmation. This is a reminder that treating media like real life, including treating computers like people, is not clearly non-adaptive (contra Reeves & Nass, 1996) or maladaptive (contra Lee, 2004).

References

Kass, A. (2007). Transforming the Mobile Phone into a Personal Performance Coach. In B. J. Fogg & D. Eckles (Eds.), Mobile Persuasion: 20 Perspectives on the Future of Behavior Change. Stanford Captology Media.

Lee, K. M. (2004). Why Presence Occurs: Evolutionary Psychology, Media Equation, and Presence. Presence: Teleoperators & Virtual Environments, 13(4), 494-505. doi: 10.1162/1054746041944830.

Nass, C., & Moon, Y. (2000). Machines and Mindlessness: Social Responses to Computers. Journal of Social Issues, 56(1), 81-103.

Reeves, B., & Nass, C. (1996). The Media Equation: How People Treat Computers, Television, and New Media Like Real People and Places. Cambridge University Press.

Snyder, M., & Stukas, A. A. (1999). Interpersonal processes: The interplay of cognitive, motivational, and behavioral activities in social interaction. Annual Review of Psychology, 50(1), 273-303.

Snyder, M., & Swann, W. B. (1978). Behavioral confirmation in social interaction: From social perception to social reality. Journal of Experimental Social Psychology, 14(2), 148-162.

Swann, W. B., & Read, S. J. (1981). Self-verification processes: How we sustain our self-conceptions. Journal of Experimental Social Psychology, 17(4), 351-372. doi: 10.1016/0022-1031(81)90043-3

Velleman, J.D. (2009). How We Get Along. Cambridge University Press. The draft I quote is available from http://ssrn.com/abstract=1008501

Situational variation, attribution, and human-computer relationships

Mobile phones are gateways to our most important and enduring relationships with other people. But, like other communication technologies, the mobile phone is psychologically not only a medium: we also form enduring relationships with devices themselves and their associated software and services (Sundar 2004). While different from relationships with other people, these human–technology relationships are also importantly social relationships. People exhibit a host of automatic, social responses to interactive technologies by applying familiar social rules, categories, and norms that are otherwise used in interacting with people (Reeves and Nass 1996; Nass and Moon 2000).

These human–technology relationships develop and endure over time and through radical changes in the situation. In particular, mobile phones are near-constant companions. They take on roles of both medium for communication with other people and independent interaction partner through dynamic physical, social, and cultural environments and tasks. The global phenomenon of mobile phone use highlights both that relationships with people and technologies are influenced by variable context and that these devices are, in some ways, a constant amid these everyday changes.

Situational variation and attribution

Situational variation is important for how people understand and interact with mobile technology. This variation is an input to the processes by which people disentangle the internal (personal or device) and external (situational) causes of a social entity’s behavior (Fiedler et al. 1999; Försterling 1992; Kelley 1967), so situational variation contributes to the traits and states attributed to human and technological entities. Furthermore, situational variation influences the relationship and interaction in other ways. For example, we have recently carried out an experiment providing evidence that this situational variation itself (rather than the characteristics of the situations) influences memory, creativity, and self-disclosure to a mobile service; in particular, people disclose more in places where they have previously disclosed to the service than in new places (Sukumaran et al. 2009).
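Kelley’s covariation principle can be sketched, in its simplest textbook form, as a lookup on three binary cues: consensus (do others respond the same way to this entity?), distinctiveness (does this actor respond this way only to this entity?), and consistency (does the response recur across times and situations?). The Python function below is a deliberately coarse illustration under that simplification, not the graded, ANOVA-like model discussed by Försterling (1992) or the multi-covariate judgments studied by Fiedler et al. (1999); the function name and labels are illustrative. Situational variation enters mainly through the consistency cue: a phone that behaves one way at home and another on the train gives people low-consistency evidence.

```python
def kelley_attribution(consensus: bool, distinctiveness: bool, consistency: bool) -> str:
    """Coarse textbook reading of Kelley's (1967) covariation cues.

    consensus:       other actors respond the same way to this entity
    distinctiveness: this actor responds this way only to this entity
    consistency:     this actor responds this way across times and situations
    Returns a label for where the cause of the behavior is located.
    """
    if not consistency:
        # Behavior that does not recur is usually pinned on the particular occasion.
        return "circumstance"
    if consensus and distinctiveness:
        # Everyone reacts this way, and only to this entity: the entity is the cause.
        return "entity"
    if not consensus and not distinctiveness:
        # Only this actor reacts this way, and to many entities: the actor is the cause.
        return "actor"
    # Mixed patterns do not map cleanly onto one cause in this simplification.
    return "ambiguous"


# Illustrative use: only this user (low consensus) is frustrated with many apps
# (low distinctiveness), every time (high consistency).
print(kelley_attribution(consensus=False, distinctiveness=False, consistency=True))  # → actor
```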

Not only does the situation vary, but mobile technologies are increasingly responsive to the environments they share with their human interactants. A system’s systematic and purposive responsiveness to the environment means that explaining its behavior is about more than distinguishing internal and external causes: people explain behavior by attributing reasons to the entity, and these reasons may trivially refer to either internal or external causes. For example, contrast “Jack bought the house because it was secluded” (external) with “Jack bought the house because he wanted privacy” (internal) (Ross 1977, p. 176). Much research in the social cognition and attribution theory traditions of psychology has failed to address this richness of people’s everyday explanations of others’ behavior (Malle 2004; McClure 2002), but contemporary interdisciplinary work is drawing on theories and methods from philosophy and developmental psychology to this end (e.g., the contributions to Malle et al. 2001).

These two developments — the increasing role of situational variation in human-technology relationships and a new appreciation of the richness of everyday explanations of behavior — are important to consider together in designing new research in human-computer interaction, psychology, and communication. Here are three suggestions about directions to pursue in light of this:

Design systems that provide constancy and support through radical situational changes in both the social and physical environment. For example, we have created a system that uses the voices of participants in an upcoming event as audio primes during transition periods (Sohn et al. 2009). This can help ease the transition from a long corporate meeting to a chat with fellow parents at a child’s soccer game.

Design experimental manipulations and measures based on features of folk psychology identified by philosophers. Folk psychology is the implicit theory or set of capabilities by which we attribute, e.g., beliefs, thoughts, and desires (propositional attitudes) to others (Dennett 1987). For example, attributions of propositional attitudes (e.g., beliefs) to an entity have the linguistic feature that one cannot substitute different terms that refer to the same object while preserving the truth or appropriateness of the statement: “Lois Lane believes Superman can fly” may be true while “Lois Lane believes Clark Kent can fly” is false, even though Superman is Clark Kent. This opacity in attributions of propositional attitudes is the subject of a large literature (e.g., following Quine 1953), but it has not been used as a lens for much empirical work, except in some developmental psychology (e.g., Apperly and Robinson 2003). Human-computer interaction research should use this opacity (and other underused features of folk psychology) in studies of how people think about systems.

Connect work on mental models of systems (e.g., Kempton 1986; Norman 1988) to theories of social cognition and folk psychology. I think we can expect much larger overlap in the processes involved than the current research literature reflects: people use folk psychology to understand, predict, and explain technological systems, not just other people.

References

Apperly, I. A., & Robinson, E. J. (2003). When can children handle referential opacity? Evidence for systematic variation in 5- and 6-year-old children’s reasoning about beliefs and belief reports. Journal of Experimental Child Psychology, 85(4), 297-311. doi: 10.1016/S0022-0965(03)00099-7.

Dennett, D. C. (1987). The Intentional Stance (p. 388). MIT Press.

Fiedler, K., Walther, E., & Nickel, S. (1999). Covariation-based attribution: On the ability to assess multiple covariates of an effect. Personality and Social Psychology Bulletin, 25(5), 609.

Försterling, F. (1992). The Kelley model as an analysis of variance analogy: How far can it be taken? Journal of Experimental Social Psychology, 28(5), 475-490. doi: 10.1016/0022-1031(92)90042-I.

Kelley, H. H. (1967). Attribution theory in social psychology. In Nebraska Symposium on Motivation (Vol. 15).

Malle, B. F. (2004). How the Mind Explains Behavior: Folk Explanations, Meaning, and Social Interaction. Bradford Books.

Malle, B. F., Moses, L. J., & Baldwin, D. A. (2001). Intentions and Intentionality: Foundations of Social Cognition. MIT Press.

McClure, J. (2002). Goal-Based Explanations of Actions and Outcomes. In W. Stroebe & M. Hewstone (Eds.), European Review of Social Psychology (pp. 201-235). John Wiley & Sons, Inc. Retrieved from http://dx.doi.org/10.1002/0470013478.ch7.

Nass, C., & Moon, Y. (2000). Machines and Mindlessness: Social Responses to Computers. Journal of Social Issues, 56(1), 81-103.

Norman, D. A. (1988). The Psychology of Everyday Things. New York: Basic Books.

Quine, W. V. O. (1953). From a Logical Point of View: Nine Logico-Philosophical Essays. Harvard University Press.

Reeves, B., & Nass, C. (1996). The media equation: how people treat computers, television, and new media like real people and places (p. 305). Cambridge University Press.

Ross, L. (1977). The intuitive psychologist and his shortcomings: Distortions in the attribution process. In L. Berkowitz (Ed.), Advances in Experimental Social Psychology (Vol. 10, pp. 174-221). New York: Academic Press.

Sohn, T., Takayama, L., Eckles, D., & Ballagas, R. (2009). Auditory Priming for Upcoming Events. Forthcoming in CHI ’09 extended abstracts on Human factors in computing systems. Boston, Massachusetts, United States: ACM Press.

Sukumaran, A., Ophir, E., Eckles, D., & Nass, C. I. (2009). Variable Environments in Mobile Interaction Aid Creativity but Impair Learning and Self-disclosure. To be presented at the Association for Psychological Science Convention, San Francisco, California.

Sundar, S. S. (2004). Loyalty to computer terminals: is it anthropomorphism or consistency? Behaviour & Information Technology, 23(2), 107-118.

Transformed social interaction and actively mediated communication

Transformed social interaction (TSI) is modification, filtering, and synthesis of representations of face-to-face communication behavior, identity cues, and sensing in a collaborative virtual environment (CVE): TSI flexibly and strategically decouples representation from behavior. In this post, I want to extend this notion of TSI, as presented in Bailenson et al. (2005), in two general ways. We have begun calling the larger category actively mediated communication.1

First, I want to consider a larger category of strategic mediation in which no communication behavior is changed or added between different existing participants. This includes applying influence strategies to the feedback to the communicator as in coaching (e.g., Kass 2007) and modification of the communicator’s identity as presented to himself (i.e. the transformations of the Proteus effect). This extension entails a kind of unification of TSI with persuasive technology for computer-mediated communication (CMC; Fogg 2002, Oinas-Kukkonen & Harjumaa 2008).

Second, I want to consider a larger category of media in which the same general ideas of TSI can be manifest, albeit in quite different ways. As described by Bailenson et al. (2005), TSI is (at least in exemplars) limited to transformations of representations of the kind of non-verbal behavior, sensing, and identity cues that appear in face-to-face communication, and thus in CVEs. I consider examples from other forms of communication, including active mediation of the content, verbal or non-verbal, of a communication.

Feedback and influence strategies: TSI and persuasive technology

TSI is exemplified by direct transformation that is continuous and dynamic, rather than, e.g., static anonymization or pseudonymization. These transformations are complex means to strategic ends, and they function through a “two-step” programmatic-psychological process. For example, a non-verbal behavior is changed (modified, filtered, replaced), and then the resulting representation affects the end through a psychological process in other participants. Similar ends can be achieved by similar means in the second (psychological) step, without the same kind of direct programmatic change of the represented behavior.

In particular, consider coaching of non-verbal behavior in a CVE, a case already considered as an example of TSI (Bailenson et al. 2005, pp. 434-6), if not a particularly central one. In one case, auxiliary information is used to help someone interact more successfully:

In those interactions, we render the interactants’ names over their heads on floating billboards for the experimenter to read. In this manner the experimenter can refer to people by name more easily. There are many other ways to use these floating billboards to assist interactants, for example, reminders about the interactant’s preferences or personality (e.g., “doesn’t respond well to prolonged mutual gaze”). (Bailenson et al. 2005, pp. 435-436)

While this method can bring about change in non-verbal behaviors as represented in the CVE and thus achieve the same strategic goals by the same means in the second (psychological) step, it does not do so in the characteristic TSI way: it doesn’t decouple the representation from the behavior; instead it changes the behavior itself in the desired way. I think our understanding of the core of TSI is improved by excluding this kind of active mediation (even that presented by Bailenson et al.) and considering it instead a proper part of the superset – actively mediated communication. With this broadened scope we can take advantage of the wider range of strategies, taxonomies, and examples available from the study of persuasive technology.

TSI ideas outside CVEs

TSI is established as applying to CVEs. Standard TSI examples take place in CVEs and the feasibility of TSI is discussed with regard to CVEs. This focus is also manifest in the fact that it is behaviors, identity cues, and sensing that are normally available that are the starting point for transformation. Some of the more radical transformations of sensing and identity are nonetheless explained with reference to real-world manifestation: for example, helpers walk like ghosts amongst those you are persuading, reporting back on what they learn.

But I think this latter focus is just an artifact of the fact that, in a CVE, all the strategic transformations have to be manifest as representations of face-to-face encounters. As evidence for the anticipation of the generalization of TSI ideas beyond CVEs, we see that Bailenson et al. (2005, p. 428) introduce TSI with examples from the kind of outright blocking of any representation of particular non-verbal behaviors in telephone calls. Of course, this is not the kind of dynamic transformation characteristic of TSI, but this highlights how TSI ideas make sense outside of CVEs as well. To make it more clear what I mean by this, I present three examples: transformation of a shared drawing, coaching and augmentation in face-to-face conversation, and aggregation and synthesis in an SNS-based event application, like Facebook Events.

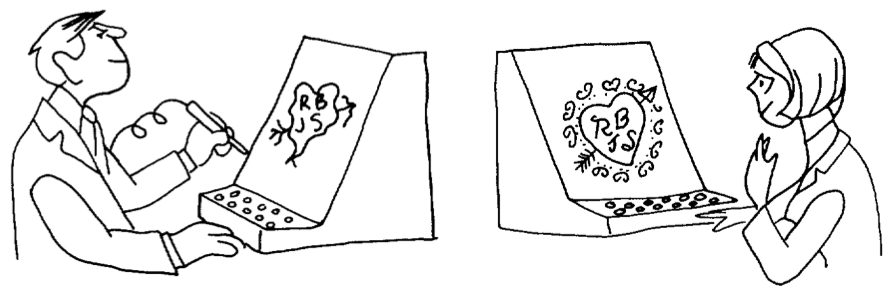

This more general notion of actively mediated communication is present in the literature as early as 1968, in the work of Licklider & Taylor (1968). In one interesting example, which is also a great example of 1960s gender roles, a man draws an arrow-pierced heart with his initials and the initials of a romantic interest or partner, but when this heart is shared with her (perhaps in real time as he draws it), it is rendered as a beautiful heart with little resemblance to his original, poor sketch. The figure illustrating the example is captioned, “A communication system should make a positive contribution to the discovery and arousal of interests” (Licklider & Taylor 1968, p. 26). This example clearly exemplifies the idea of TSI: decoupling the original behavior from its representation in a strategic way that requires an intelligent process (or human-in-the-loop) to make the transformation responsive to the specific circumstances and goals.

Licklider & Taylor also consider examples in which computers take an active role in a face-to-face presentation by adding a shared, persuasive simulation (cf. Fogg 2002 on computers in the functional role of interactive media such as games and simulations). But a clearer example, which also bears more resemblance to characteristic TSI examples, is conversation and interaction coaching via a wireless headset that can determine how much each participant is speaking, for how long, and how often they interrupt each other (Kass 2007). One could even imagine a case with greater similarity to the TSI example considered in the previous section: a device whispers in your ear the known preferences of the person you are talking to face-to-face (e.g., that he doesn’t respond well to prolonged mutual gaze).
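The measurements attributed to the coaching headset can be sketched from time-stamped speech segments. The Python below assumes the headset already produces diarized segments (who spoke, from when to when, in seconds); that input format, the `Segment` type, and the `coaching_stats` function are assumptions for illustration, not the system Kass (2007) describes. It counts an interruption whenever someone starts speaking while a different person’s earlier segment is still in progress.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple, Tuple


class Segment(NamedTuple):
    """One stretch of speech by one person (hypothetical diarization output)."""
    speaker: str
    start: float  # seconds from the start of the conversation
    end: float    # seconds from the start of the conversation


def coaching_stats(
    segments: Iterable[Segment],
) -> Tuple[Dict[str, float], Dict[str, int], Dict[str, int]]:
    """Return per-speaker talk time, number of turns, and interruptions made."""
    talk_time: Dict[str, float] = defaultdict(float)
    turns: Dict[str, int] = defaultdict(int)
    interruptions: Dict[str, int] = defaultdict(int)
    ordered = sorted(segments, key=lambda s: s.start)
    for i, seg in enumerate(ordered):
        talk_time[seg.speaker] += seg.end - seg.start
        turns[seg.speaker] += 1
        # Interruption: starting while another speaker's earlier segment is ongoing.
        if any(
            prev.speaker != seg.speaker and prev.start < seg.start < prev.end
            for prev in ordered[:i]
        ):
            interruptions[seg.speaker] += 1
    return dict(talk_time), dict(turns), dict(interruptions)


if __name__ == "__main__":
    demo = [Segment("A", 0.0, 10.0), Segment("B", 8.0, 12.0), Segment("A", 12.0, 15.0)]
    print(coaching_stats(demo))
    # → ({'A': 13.0, 'B': 4.0}, {'A': 2, 'B': 1}, {'B': 1})
```

The overlap check rescans earlier segments, which is quadratic but fine for a single conversation; average turn length follows from dividing talk time by turns.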

Finally, I want to share an example that is a bit farther afield from TSI exemplars, but highlights how ubiquitous this general category is becoming. Facebook includes a social event planning application with which users can create and comment on events, state their plans to attend, and share personal media and information before and after the event. Facebook presents relevant information about one’s network in a single “News Feed”. Event-related items can appear in this feed, and they feature active mediation: a user can see an item stating that “Jeff, Angela, Rich, and 6 other friends are attending X. It is at 9pm tonight”, but none of these people, nor the event creator, ever wrote this text. It has been generated strategically: it encourages the user to consider coming to the event, and it is designed to maximize the user’s sense that their News Feed is relevant. The original content, peripheral behavior, and form of their communications have been aggregated and synthesized into a new communication that suits the situation better than the originals.
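The aggregated feed item can be sketched as a small text-synthesis function. This is not Facebook’s code: `summarize_attendance`, the `closeness` scores used to decide which friends get named, and the limit of three names are hypothetical, chosen only to reproduce the shape of the example above. The point is that one sentence is synthesized from many people’s separate RSVPs, with a strategic choice of whom to mention.

```python
from typing import Dict, List, Optional


def summarize_attendance(
    event: str,
    when: str,
    attendees: List[str],
    closeness: Optional[Dict[str, float]] = None,
    max_named: int = 3,
) -> str:
    """Synthesize one feed item from many friends' separate RSVPs.

    closeness is a hypothetical per-friend relevance score; the closest friends
    are named first (ties keep the input order, since sorted() is stable).
    """
    if not attendees:
        return f"{event} is at {when}."
    scores = closeness or {}
    ranked = sorted(attendees, key=lambda name: scores.get(name, 0.0), reverse=True)
    named = ranked[: max(1, max_named)]
    others = len(ranked) - len(named)
    if others > 0:
        noun = "friend" if others == 1 else "friends"
        who = ", ".join(named) + f", and {others} other {noun}"
    elif len(named) == 1:
        who = named[0]
    elif len(named) == 2:
        who = f"{named[0]} and {named[1]}"
    else:
        who = ", ".join(named[:-1]) + ", and " + named[-1]
    verb = "is" if len(ranked) == 1 else "are"
    return f"{who} {verb} attending {event}. It is at {when}."


if __name__ == "__main__":
    friends = ["Jeff", "Angela", "Rich", "Sam", "Priya", "Lee", "Ana", "Tom", "Kim"]
    print(summarize_attendance("X", "9pm tonight", friends))
    # → Jeff, Angela, Rich, and 6 other friends are attending X. It is at 9pm tonight.
```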

Source orientation in actively mediated communication

Bailenson et al. (2005) consider the consequences of TSI for trust in CVEs and how feasible it is to detect TSI. I’ve suggested that we can see TSI-like phenomena, both actual and possible, outside of CVEs and outside of a narrow version of TSI that requires directly (programmatically) changing the represented behavior without changing the actual behavior. Many of the same consequences for trust may apply.

But even when the active mediation is to some degree explicit (participants are aware that some active mediation is going on, though perhaps not exactly what), interesting questions about source orientation still apply. There is substantial evidence that people orient to the proximal rather than the distal source in using computers and other media (Sundar & Nass 2000, Nass & Moon 2000), but this work has been limited to relatively simple situations, rather than the complex, multi-sourced, actively mediated communications under discussion. I think we should expect that proximality will not consistently predict the degree of source orientation (the impact of source characteristics) in these circumstances. The most proximal source may be a dumb terminal or pipe (cf. the poor evidence for proximal source orientation in the case of televisions, Reeves & Nass 1996). Or the most proximal source may be an avatar, the second most proximal a cyranoid/ractor or a computer process, and the most distant the person whose visual likeness the avatar resembles. In these cases one would expect source orientation to be directed not at the most proximal source, but at the sources that are more phenomenologically present and more available to mind.

This seems like a promising direction for research to me. Most generally, it is part of the study of source orientation in more complex configurations, with multiple devices, multiple sources, and multiple brands and identities. Consider a basic three-condition experiment in which participants interact with another person and are told either (1) nothing about any active mediation, (2) that a computer is actively mediating the other person’s communications, or (3) that a human (or perhaps multiple humans) is actively mediating the other person’s communications. I am not sure this is the best design, but I think it points toward the following questions:

- When and how do people apply familiar social cognition strategies (e.g., folk psychology of propositional attitudes) to understanding, explaining, and predicting the behavior of a collection of people (e.g., multiple cyranoids, or workers in a task completion market like Amazon Mechanical Turk)?

- What differences are there in social responses, source orientation, and trust between active mediation that is (ostensibly) carried out by (1) a single human, (2) multiple humans each doing very small pieces, (3) a computer?
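For the three-condition design sketched above, one standard way to keep condition sizes balanced is blocked random assignment: shuffle the three conditions within each consecutive block of three participants. The Python below is a minimal sketch; the condition labels and the `assign_conditions` function are hypothetical, and a real study would also need to handle dropouts and record the seed it used.

```python
import random
from collections import Counter
from typing import Dict, Hashable, Iterable, Optional

# Hypothetical labels for the three instructions participants might receive.
CONDITIONS = ("told_nothing", "told_computer_mediator", "told_human_mediator")


def assign_conditions(
    participant_ids: Iterable[Hashable], seed: Optional[int] = None
) -> Dict[Hashable, str]:
    """Blocked random assignment: every full block of three gets each condition once."""
    rng = random.Random(seed)  # seeded generator so the assignment can be reproduced
    ids = list(participant_ids)
    assignment: Dict[Hashable, str] = {}
    for start in range(0, len(ids), len(CONDITIONS)):
        block = list(CONDITIONS)
        rng.shuffle(block)
        # zip stops early, so a final partial block gets a random subset of conditions
        for pid, condition in zip(ids[start:start + len(CONDITIONS)], block):
            assignment[pid] = condition
    return assignment


if __name__ == "__main__":
    groups = assign_conditions(range(12), seed=2009)
    print(Counter(groups.values()))  # each condition appears 4 times
```

Because every complete block contains each condition once, group sizes never differ by more than one, which simple coin-flip assignment does not guarantee.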

References

Eckles, D., Ballagas, R., Takayama, L. (unpublished manuscript). The Design Space of Computer-Mediated Communication: Dimensional Analysis and Actively Mediated Communication.

Fogg, B.J. (2002). Persuasive Technology: Using Computers to Change What We Think and Do. Morgan Kaufmann.

Kass, A. (2007). Transforming the Mobile Phone into a Personal Performance Coach. Mobile Persuasion: 20 Perspectives on the Future of Influence, ed. B.J. Fogg & D. Eckles, Stanford Captology Media.

Licklider, J.C.R., & Taylor, R.W. (1968). The Computer as a Communication Device. Science and Technology, April 1968. Page numbers from version reprinted at http://gatekeeper.dec.com/pub/DEC/SRC/research-reports/abstracts/src-rr-061.html.

Nass, C., and Moon, Y. (2000). Machines and Mindlessness: Social Responses to Computers. Journal of Social Issues, 56(1), 81-103.

Oinas-Kukkonen, H., & Harjumaa, M. (2008). A Systematic Framework for Designing and Evaluating Persuasive Systems. In Proceedings of Persuasive Technology: Third International Conference, Springer, pp. 164-176.

Sundar, S. S., & Nass, C. (2000). Source Orientation in Human-Computer Interaction: Programmer, Networker, or Independent Social Actor? Communication Research, 27(6).

- This idea is expanded upon in Eckles, Ballagas, and Takayama (ms.), to be presented at the workshop on Socially Mediating Technologies at CHI 2009. This working paper will be available online soon. [↩]