Public once, public always? Privacy, egosurfing, and the availability heuristic

The Library of Congress has announced that it will be archiving all Twitter posts (tweets). You can find positive reactions on Twitter. But some have also raised privacy concerns. Fred Stutzman, for example, points out how, even assuming that only unprotected accounts are being archived, this can still be problematic.1 While some people have Twitter usernames that easily identify them, and many allow themselves to be found based on an email address that is publicly associated with their identity, there are also many who do not. If, at some future time, such an account becomes associated with its owner’s identity for a larger audience than they desire, they can make their whole account viewable only by approved followers2, delete the account, or delete some of the tweets. Of course, this information may remain elsewhere on the Internet for a short or long time. But in contrast, the Library of Congress archive will be much more enduring and likely outside of individual users’ control.3 While I think it is worth examining the strategies that people adopt to cope with inflexible or difficult-to-use privacy controls in software, I don’t intend to do that here.

Instead, I want to relate this discussion to my continued interest in how activity streams and other information consumption interfaces affect their users’ beliefs and behaviors through the availability heuristic. In response to some comments on his first post, Stutzman argues that people overestimate the degree to which content once public on the Internet is public forever:

So why is it that we all assume that the content we share publicly will be around forever? I think this is a classic case of selection on the dependent variable. When we Google ourselves, we are confronted with what’s there as opposed to what’s not there. The stuff that goes away gets forgotten, and we concentrate on things that we see or remember (like a persistent page about us that we don’t like). In reality, our online identities decay, decay being a stochastic process. The internet is actually quite bad at remembering.

This unconsidered “selection on the dependent variable” is one way of thinking about some cases of how the availability heuristic (and the use of ease-of-retrieval information more generally) operates. But I actually think the latter is more general and more useful for describing the psychological processes involved. For example, it highlights both that there are many occurrences or interventions that can influence which cases are available to mind and that, even if people have thought about cases where their content disappeared at some point, these cases may not be easily retrieved when making particular privacy decisions or offering opinions on others’ actions.

Stutzman’s example is but one way that the availability heuristic and existing Internet services combine to affect privacy decisions. For example, consider how activity streams like Facebook News Feed influence how people perceive their audience. News Feed shows items drawn from an individual’s friends’ activities, and these friends often have some reciprocal access. However, the items in the activity stream are likely unrepresentative of this potential and likely audience. “Lurkers” (people who consume but do not produce) are not as available to mind, and prolific producers are too available to mind for how often they are in the actual audience for some new shared content. This can, for example, lead to making self-disclosures that are not appropriate for the actual audience.

- This might not be the case; see Michael Zimmer and this New York Times article.

- Why don’t people do this in the first place? Many may not be aware of the feature, but even if they are, there are reasons not to use it. For example, it makes any participation in topical conversations (e.g., around a hashtag) difficult or impossible.

- Or at least this control would have to be via Twitter, likely before archiving: “We asked them [Twitter] to deal with the users; the library doesn’t want to mediate that.”

Not just predicting the present, but the future: Twitter and upcoming movies

Search queries have been used recently to “predict the present”, as Hal Varian has called it. Now there is some initial use of Twitter chatter to predict the future:

The chatter in Twitter can accurately predict the box-office revenues of upcoming movies weeks before they are released. In fact, Tweets can predict the performance of films better than market-based predictions, such as Hollywood Stock Exchange, which have been the best predictors to date. (Kevin Kelly)

Here is the paper by Asur and Huberman from HP Labs. Also see a similar use of online discussion forums.

But the obvious question from my previous post is, how much improvement do you get by adding more inputs to the model? That is, how does the combined Hollywood Stock Exchange and Twitter chatter model perform? The authors report adding the number of theaters the movie opens in to both models, but not combining them directly.

Persuasion profiling and genres: Fogg in 2006

Maurits Kaptein and I have recently been thinking a lot about persuasion profiling — estimating and adapting to individual differences in responses to influence strategies based on past behavior and other information. With help from students, we’ve been running experiments and building statistical models that implement persuasion profiling.

My thinking on persuasion profiling is very much in BJ Fogg’s footsteps, since he has been talking about persuasion profiling in courses, lab meetings, and personal discussions since 2004 or earlier.

Just yesterday, I came across this transcript of BJ’s presentation for an FTC hearing in 2006. I was struck by how much it anticipates some of what Maurits and I have written recently (more on this later). I’m sure I watched the draft video of the presentation back then and it has influenced me, even if I forgot some of the details.

Here is the relevant excerpt from BJ’s comments for the FTC:

Persuasion profiling means that each one of us has a different set of persuasion strategies that affect us. Just like we like different types of food or are vulnerable to giving in to different types of food on a diet, we are vulnerable to different types of persuasion strategies.

On the food example, I love old-fashioned popcorn, and if I go to a party and somebody has old-fashioned popcorn, I will probably break down and eat it. On the persuasion side of things, I know I’m vulnerable to trying new things, to challenges and to anything that gets measured. If that’s proposed to me, I’m going to be vulnerable and I’m going to give it a shot.

Whenever we go to a Web site and use an interactive system, it is likely they will be capturing what persuasion strategies work on us and will be using those when we use the service again. The mapping out of what makes me tick, what motivates me can also be bought or sold, just like a credit report.

So imagine I’m going in to buy a new car and the person selling me the car downloads my credit report but also buys my persuasion profile. I may or may not know about this. Imagine if persuasion profiles are available on political campaigns so that when I visit a Web site, the system knows it is B.J. Fogg, and it changes [its] approach based on my vulnerabilities when it comes to persuasion.

Persuasive technology will touch our lives anywhere that we access digital products or services, in the car, in our living room, on the Web, through our mobile phones and so on. Persuasive technology will be all around us, and unlike other media types, where you have 30-second commercial or a magazine ad, you have genres you can understand, when it comes to computer-based persuasion, it is so flexible that it won’t have genre boundaries. It will come to us in the ordinary course of our lives, as we are working on a Web site, as we are editing a document, as we are driving a car. There won’t be clear markers about when you are being persuaded and when you are not.

This last paragraph is about the “genrelessness” of many persuasive technologies. This isn’t directly on the topic of persuasion profiling, but I see it as critically relevant. Persuasion profiling is likely to be most effective when invisible and undisclosed to users. From this, together with the lack of genre-based flags for persuasive technology, it follows that we will frequently be “persuasion profiled” without knowing it.

Search terms and the flu: preferring complex models

Simplicity has its draws. A simple model of some phenomenon can be quick to understand and test. But with the resources we have today for theory building and prediction, it is worth recognizing that many phenomena of interest (e.g., in social sciences, epidemiology) are very, very complex. Using a more complex model can help. It’s great to try many simple models along the way, as scaffolding, but if you have a large enough N in an observational study, a larger model will likely be an improvement.

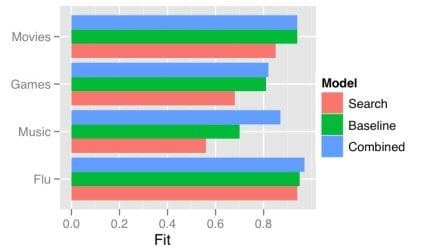

One obvious way a model gets more complex is by adding predictors. There has recently been a good deal of attention on using the frequency of search terms to predict important goings-on — like flu trends. Sharad Goel et al. (blog post, paper) temper the excitement a bit by demonstrating that simple models using other, existing public data sets outperform the search data. In some cases (music popularity, in particular), adding the search data to the model improves predictions: the more complex combined model can “explain” some of the variance not handled by the more basic non-search-data models.

This echoes one big takeaway from the Netflix Prize competition: committees win. The top competitors were all large teams formed from smaller teams and their models were tuned combinations of several models. That is, the strategy is to take a bunch of complex models and combine them.

One way of doing this is just taking a weighted average of the predictions of several simpler models. This works quite well when your measure of the value of your model is root mean squared error (RMSE), since RMSE is convex.
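To make the convexity point concrete, here is a minimal sketch in Python. The data and the two "models" are made up purely for illustration. Because RMSE is convex in the predictions, the RMSE of a weighted average of predictions (with non-negative weights summing to one) can be no worse than the same weighted average of the individual RMSEs, and it is often much better when the models' errors partly cancel.

```python
import math


def rmse(predictions, targets):
    """Root mean squared error between two equal-length sequences."""
    squared = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return math.sqrt(sum(squared) / len(squared))


def blend(model_predictions, weights):
    """Weighted average of several models' predictions, item by item."""
    n_items = len(model_predictions[0])
    return [
        sum(w * preds[i] for w, preds in zip(weights, model_predictions))
        for i in range(n_items)
    ]


# Made-up targets and predictions from two hypothetical models.
targets = [3.0, 4.0, 2.0, 5.0, 1.0]
model_a = [3.5, 3.0, 2.5, 4.0, 2.0]
model_b = [2.0, 4.5, 1.0, 5.5, 0.5]
weights = [0.5, 0.5]  # non-negative and summing to one

blended = blend([model_a, model_b], weights)
rmse_a = rmse(model_a, targets)
rmse_b = rmse(model_b, targets)
rmse_blend = rmse(blended, targets)

# Convexity guarantees rmse_blend <= this weighted average of RMSEs.
bound = weights[0] * rmse_a + weights[1] * rmse_b

print(f"model A: {rmse_a:.3f}, model B: {rmse_b:.3f}")
print(f"blend:   {rmse_blend:.3f} (bound: {bound:.3f})")
```

In this toy case the two models err in opposite directions on most items, so the blend does far better than either alone. In practice the weights would be tuned on held-out data rather than fixed at one half each.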

While often the larger model “explains” more of the variance, what “explains” means here is just that the R-squared is larger: less of the variance is error. More complex models can be difficult to understand, just like the phenomena they model. We will continue to need better tools to understand, visualize, and evaluate our models as their complexity increases. I think the committee metaphor will be an interesting and practical one to apply in the many cases where the best we can do is use a weighted average of several simpler, pretty good models.

Reprioritizing human intelligence tasks for low latency and high throughput on Mechanical Turk

Amazon Mechanical Turk is a platform and market for human intelligence tasks (HITs) that are submitted by requesters and completed by workers (or “turkers”). Each HIT is associated with a payment, often a few cents. This post covers some basics of Mechanical Turk and shows how its lack of designed-in support for dynamic reprioritization is problematic for some uses. I also mention some other factors that influence latency and throughput.

With mTurk one can create a HIT that asks someone to rate some search results for a query, evaluate the credibility of a Wikipedia article, draw a sheep facing left, enter names for a provided color, annotate a photo of a person with pose information, or create a storyboard illustrating a new product idea. So Mechanical Turk can be used in many ways for basic research, building a training set for machine learning, or actually enabling a (perhaps prototype) service in use through a kind of Wizard-of-Oz approach. Additionally, I’ve used mTurk to code images captured by participants in a lab experiment (more on this in another post or article).

When creating HITs, a requester can specify a QuestionForm (QF) (e.g., via command line tools or an SDK) that is then presented to the worker by Amazon. This can include images, free text answers, multiple choice, etc. Additionally, one can embed Flash or Java objects in it. But the easiest way of creating HITs is to use a QF and not create a Java or Flash application of one’s own. This is especially true for HITs that are handled well by the basic question form. The other option is to create an ExternalQuestion (EQ), which is hosted on one’s own server and is displayed in an iFrame. This provides greater freedom but requires additional development, and you must host the page yourself (though you can do so through Amazon’s S3). QF HITs (without embeds) also offer a familiar interface to workers (though it is possible to create a more efficient, custom interface by, e.g., making all the targets larger). So when possible, it is often preferable to use a QF rather than an EQ.

For some of the uses of mTurk for powering a service, it can be important to minimize latency for specific HITs1, including prioritizing particular new HITs over previously created HITs. For example, after some HIT has not been completed for a specific period after creation, it may still be important to complete it, but exactly when it is completed may matter less. This can happen easily if the value of a HIT being completed has a sharp drop-off after some time.

This should be done while maintaining high throughput; that is, you don’t want to reduce the rate at which your HITs are completed. When there are more HITs of the same type, workers can check a box to immediately start the next HIT of the same type when they submit the current one (see screenshot). Workers will often complete many HITs of the same type in a row. So throughput can drop substantially if any workers run out of HITs of the same type at any point: they may switch to another HIT type, or, if they do return once your HITs appear again, there will be a delay. As we’ll see, these two requirements don’t seem to be well met by the platform, or at least by certain uses of it.

Mechanical Turk does not provide a mechanism for prioritizing HITs of the same type, so without deleting all but particular high-priority HITs of that type, there is not a way to ensure that some particular HIT gets done before the rest. And deleting the other HITs would hurt throughput and increase latency for any new high-priority HITs added in the near future (since workers won’t simply start these once they finish their previous HITs).

EQ HITs allow one to avoid this problem. Unlike with QF HITs (without Flash and Java embeds), one does not have to specify the full content of the HIT in advance. When a worker accepts an EQ HIT, you can dynamically serve up the HIT you want depending on changing priorities. But this means that you can’t take advantage of, e.g., the simplicity of creating and managing data from QF HITs. So though there are ways of coping, adding dynamic reprioritization to Mechanical Turk would be a boon for time-sensitive uses.
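As a rough sketch of what serving by priority could look like on one's own server, the Python below chooses which pending task to show when a worker loads an EQ page. Everything here is hypothetical and not part of any Mechanical Turk API: the `Task` fields, the `pick_next_task` function, and the particular priority rule. The rule assumed is that tasks still inside their time window come first (most urgent first), and tasks whose window has passed are served afterwards, oldest first.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """A unit of work we want some worker to complete (hypothetical)."""
    task_id: str
    created_at: float      # seconds, on any consistent clock
    window_seconds: float  # completion is most valuable within this window


def pick_next_task(pending, now):
    """Remove and return the highest-priority pending task, or None.

    Assumed priority rule: tasks still within their window come first,
    ordered by least time remaining; expired tasks follow, oldest first.
    """
    if not pending:
        return None

    def sort_key(task):
        remaining = task.created_at + task.window_seconds - now
        expired = remaining <= 0
        # False sorts before True, so unexpired tasks win.
        return (expired, task.created_at if expired else remaining)

    best = min(pending, key=sort_key)
    pending.remove(best)
    return best


# Example: three pending tasks, evaluated at time 110.
pending = [
    Task("old", created_at=0, window_seconds=60),        # expired
    Task("urgent", created_at=100, window_seconds=30),   # 20s left
    Task("relaxed", created_at=90, window_seconds=120),  # 100s left
]
order = []
while pending:
    order.append(pick_next_task(pending, now=110).task_id)
print(order)
```

A function like this would run each time a worker accepts an assignment, so priorities are evaluated at the moment of serving rather than at HIT creation.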

There are, of course, other factors that influence latency and throughput on mTurk when (EQ) HITs are reprioritized. Here are a few:

- HIT and sub-task duration. How long does it take for workers to complete a HIT, which may be composed of multiple sub-tasks? A worker cannot be assigned a new HIT until they complete (or reject) the previous one. This can be somewhat avoided by creating longer HITs that are subdivided into dynamically selected sub-tasks. This can be done with an EQ HIT or an embedded Flash or Java application in a QF HIT. But the sub-task duration is always a limiting factor, unless one is willing to force abortion of the current sub-task, replacing it while still in progress (with an EQ, Flash, or Java).

- Available workers. How many workers are logged into mTurk and completing tasks? How many are currently switching HIT types? This can vary with the time of day.

- Appeal of your HITs. How much do workers like your HITs — are they fun? How much do you pay for how much you ask? How many of their completed assignments do you approve?

- Reliability. How accurate or precise must your results be? How many workers do you need to complete a HIT before you have reliable results? Do other workers need to complete meta-HITs before the data can be used?
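On the reliability point in the list above, one simple way to decide whether enough workers have completed a HIT is a majority vote with a minimum number of votes and a minimum level of agreement. The Python sketch below is only an illustration: the function name and both thresholds are assumptions, and real uses may need something more careful (e.g., weighting workers by past accuracy).

```python
from collections import Counter


def aggregate_labels(labels, min_votes=3, min_agreement=0.6):
    """Return the majority label, or None if results are not yet reliable.

    min_votes and min_agreement are illustrative thresholds, not
    recommendations; None means more assignments are needed.
    """
    if len(labels) < min_votes:
        return None
    label, count = Counter(labels).most_common(1)[0]
    if count / len(labels) >= min_agreement:
        return label
    return None


print(aggregate_labels(["cat", "cat", "dog"]))   # two of three agree
print(aggregate_labels(["cat", "dog"]))          # too few votes so far
print(aggregate_labels(["cat", "dog", "bird"]))  # no sufficient agreement
```

Each additional required vote adds to latency and cost, so these thresholds trade off directly against the throughput concerns discussed above.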

- I use the term HIT somewhat loosely in this article. There are at least three uses that each differ in their identity conditions. (1) There are HITs considered as human intelligence tasks, and thus divided as we divide tasks; this means that a HIT in another sense can be composed of multiple HITs in this sense (tasks or sub-tasks). (2) There are HITs in Amazon’s technical sense of the term: a HIT is something that has the same HIT ID and therefore has the same specification. In QF HITs without embeds, this means all instances (assignments) of a HIT are the same in content; but in EQ HITs this is not necessarily true, since the content can be determined when assigned. (3) Finally, there is what Amazon calls assignments: specific instances of a HIT that are only completed once.