Search terms and the flu: preferring complex models

Simplicity has its draws. A simple model of some phenomenon can be quick to understand and test. But with the resources we have today for theory building and prediction, it is worth recognizing that many phenomena of interest (e.g., in social sciences, epidemiology) are very, very complex. Using a more complex model can help. It’s great to try many simple models along the way, as scaffolding, but if you have a large enough N in an observational study, a larger model will likely be an improvement.

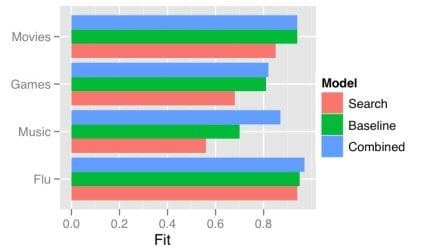

One obvious way a model gets more complex is by adding predictors. There has recently been a good deal of attention on using the frequency of search terms to predict important goings-on — like flu trends. Sharad Goel et al. (blog post, paper) temper the excitement a bit by demonstrating that simple models using other, existing public data sets outperform the search data. In some cases (music popularity, in particular), adding the search data to the model improves predictions: the more complex combined model can “explain” some of the variance not handled by the more basic non-search-data models.

This echoes one big takeaway from the Netflix Prize competition: committees win. The top competitors were all large teams formed from smaller teams, and their models were tuned combinations of several models. That is, the strategy is to take a bunch of complex models and combine them.

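One way of doing this is just taking a weighted average of the predictions of several simpler models. This works quite well when your measure of the value of your model is root mean squared error (RMSE), since RMSE is convex: the RMSE of a weighted average of predictions is never greater than the same weighted average of the individual models’ RMSEs.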
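A minimal sketch of such a blend, using made-up predictions from two hypothetical models (`model_a`, `model_b`) and equal weights. RMSE is a scaled Euclidean norm of the error vector, and when the weights are nonnegative and sum to 1 the blended errors are the weighted average of the component errors, so the triangle inequality gives the convexity bound checked at the end.

```python
import math


def rmse(predicted, actual):
    """Root mean squared error between two equal-length sequences."""
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual))


def blend(predictions, weights):
    """Weighted average of several models' predictions.

    `predictions` is a list of per-model prediction lists; `weights` must be
    nonnegative and sum to 1 (a convex combination).
    """
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be nonnegative and sum to 1")
    return [sum(w * p[i] for w, p in zip(weights, predictions))
            for i in range(len(predictions[0]))]


# Made-up data for illustration only.
actual = [3, 5, 2, 8]
model_a = [4, 5, 1, 7]
model_b = [2, 6, 3, 9]
weights = [0.5, 0.5]

combined = blend([model_a, model_b], weights)
print(rmse(model_a, actual), rmse(model_b, actual), rmse(combined, actual))

# Convexity bound: the blend is never worse than the weighted average of component RMSEs.
bound = sum(w * rmse(m, actual) for w, m in zip(weights, [model_a, model_b]))
assert rmse(combined, actual) <= bound
```

Here the blend (RMSE 0.25) beats both components (about 0.87 and 1.0) because their errors largely cancel. The convexity bound itself only promises that the blend will not exceed the weighted average of the components’ RMSEs (about 0.93 here), not that it will beat the best single model.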

While often the larger model “explains” more of the variance, what “explains” means here is just that the R-squared is larger: less of the variance is error. More complex models can be difficult to understand, just like the phenomena they model. We will continue to need better tools to understand, visualize, and evaluate our models as their complexity increases. I think the committee metaphor will be an interesting and practical one to apply in the many cases where the best we can do is use a weighted average of several simpler, pretty good models.

Multitasking among tasks that share a goal: action identification theory

Right from the start of today’s Media Multitasking Workshop[1], it’s clear that one big issue is just what people are talking about when they talk about multitasking. In this post, I want to highlight the relationship between defining different kinds of multitasking and people’s representations of the hierarchical structure of action.

It is helpful to start with a contrast between two kinds of cases.

Distributing attention towards a single goal

In the first, there is a single task or goal that involves dividing one’s attention, with the targets of attention somehow related, but of course somewhat independent. Patricia Greenfield used Pac-Man as an example: each of the ghosts must be attended to (in addition to Pac-Man himself), and each is moving independently, but each is related to the same larger goal.

Distributing attention among different goals

In the second kind of case, there are two completely unrelated tasks that divide attention, as in playing a game (e.g., solitaire) while also attending to a speech (e.g., in person, on TV). Anthony Wagner noted that in Greenfield’s listing of the benefits and costs of media multitasking, most of the listed benefits applied to the former case, while the costs she listed applied to the latter. So keeping these different senses of multitasking straight is important.

Complications

But we should not conclude that this is a clear and stable distinction that slices multitasking phenomena in just the right way. Consider one way of putting this distinction: the primary and secondary tasks can either be directed at the same goal or directed at different goals (or tasks). Let’s dig into this a bit more.[2]

Byron Reeves pointed out that sometimes “the IMing is about the game.” So we could distinguish cases by whether the goal of the IMing is the same as the goal of the in-game task(s). But making this kind of distinction requires identity conditions for goals or tasks. As Ulrich Mayr commented, goals can be at many different levels, so in order to use goal identity as the criterion, one has to select a level in the hierarchy of goals.

Action identities and multitasking

We can think about this hierarchy of goals as the network of identities for an action that are connected by the “by” relation: one does one thing by doing (several) other things. If these goals are the goals of the person as they represent them, then this is the established approach taken by action identification theory (Vallacher & Wegner, 1987), which could be a valuable lens here. Action identification theory claims that people can report an action identity for what they are doing, and that this identity is the “prepotent identity”. This prepotent identity is generally the highest-level identity under which the action is maintainable. This makes the prepotent identity at least somewhat problematic as a criterion for distinguishing these two types of multitasking, because the distinction would then depend on, e.g., how automatic or functionally transparent the behaviors involved are.

For example, if I am driving a car and everything is going well, I may represent the action as “seeing my friend Dave”. I may also represent my simultaneous, coordinating phone call with Dave under this same identity. But if driving becomes more difficult, then my prepotent identity will decrease in level in order to maintain the action. Then these two tasks would not share the prepotent action identity.
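The driving example can be made concrete with a toy formalization. This is only an illustrative sketch: action identification theory does not assign numbers to identities, so the `max_difficulty` ceilings below are hypothetical, as are the particular lower-level identities. Each task is a hierarchy ordered from highest to lowest level, and its prepotent identity is the highest level whose ceiling the current difficulty does not exceed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Identity:
    label: str
    # Hypothetical: the hardest conditions (0 = trivial, 1 = maximal) under
    # which the action can still be maintained under this identity.
    max_difficulty: float


def prepotent_identity(hierarchy, difficulty):
    """Return the highest-level identity under which the action is maintainable.

    `hierarchy` runs from highest to lowest level: each identity is done
    "by" doing the next one down. If even the lowest level is beyond its
    ceiling, fall back to that lowest level.
    """
    for identity in hierarchy:
        if difficulty <= identity.max_difficulty:
            return identity.label
    return hierarchy[-1].label


driving = [
    Identity("seeing my friend Dave", 0.4),
    Identity("driving to Dave's place", 0.8),
    Identity("keeping the car in my lane", 1.0),
]
phone_call = [
    Identity("seeing my friend Dave", 0.9),
    Identity("telling Dave when I'll arrive", 1.0),
]


def share_identity(drive_difficulty, call_difficulty=0.2):
    """Do the two simultaneous tasks currently share a prepotent identity?"""
    return (prepotent_identity(driving, drive_difficulty)
            == prepotent_identity(phone_call, call_difficulty))


print(share_identity(0.2))  # True: both tasks under "seeing my friend Dave"
print(share_identity(0.7))  # False: driving drops to a lower-level identity
```

On this reading, whether the two tasks count as “one goal” or “two goals” changes as driving conditions change, which is the instability described above.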

Prepotent action identities (i.e., the goals of the behavior as represented by the person in the moment) do not work to make this distinction for all uses. But I think that they actually do help make some good distinctions about the experience of multitasking, especially if we examine change in action identities over time.

To return to the case of media multitasking, consider the headline ticker on 24-hour news television. The headline ticker can be more or less related to what the talking heads are going on about. This could be evaluated as a semantic, topical relationship. But considered as a relationship of goals, and thus of action identities, we can see that perhaps sometimes the goals coincide even when the content is quite different. For example, my goal may simply be to “get the latest news”, and I may be able to actually maintain this action (consuming both the headline ticker and the talking heads’ statements) under this high-level identity. This is importantly different from the case in which I don’t actually maintain the action at that level, but instead must descend to, and switch between, two (or more) lower-level identities that are associated with the two streams of content.

References

Vallacher, R. R., & Wegner, D. M. (1987). What do people think they’re doing? Action identification and human behavior. Psychological Review, 94(1), 3-15.

[1] The full name is the “Seminar on the impacts of media multitasking on children’s learning and development”.

[2] As I was writing this, the topic re-emerged in the workshop discussion. I made some comments, but I think I may not have made myself clear to everyone. Hopefully this post is a bit of an improvement.

Being a lobster and using a hammer: “homuncular flexibility” and distal attribution

Jaron Lanier (2006) calls the ability of humans to learn to control virtual bodies that are quite different from our own “homuncular flexibility”. This is, for him, a dangerous idea. The idea is that the familiar mapping of the body represented in the cortical homunculus is only one option – we can flexibly act (and perceive) using quite other mappings, e.g., to virtual bodies. Your body can be tracked, and these movements can be used to control a lobster in virtual reality, while you experience (via head-mounted display, haptic feedback, etc.) the virtual space from the perspective of the lobster under your control.

This name and description make this sound quite like science fiction. In this post, I assimilate homuncular flexibility to the much more general phenomenon of distal attribution (Loomis, 1992; White, 1970). When I have a perceptual experience, I can just as well attribute that experience to (and take it as being directed at or about) more proximal or more distal phenomena. For example, I can attribute it to my sensory surface, or I can attribute it to a flower in the distance. White (1970) proposed that more distal attribution occurs when the afference (perception) is lawfully related to efference (action) on the proximal side of that distal entity. That is, if my action and perception are lawfully related on “my side” of that entity in the causal tree, then I will make attributions to that entity. Loomis (1992) adds the requirement that this lawful relationship be successfully modeled. This is close, but not quite right, for I can make distal attributions even in the absence of an actual lawful relationship that I successfully model: my (perhaps inaccurate) modeling of a (perhaps non-existent) lawful relationship will do just fine.
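A toy operationalization of the modeling criterion, offered only as an illustration (neither White nor Loomis specifies a linear model or a numeric threshold; both are assumptions here). The agent fits a linear model from its own efference samples to the resulting afference samples and attributes the experience distally when that fit is good enough. Because the fit is computed on the agent’s own observations, a small or noisy sample can yield a good fit without any real lawful relation, in keeping with the point that the agent’s modeling is what matters.

```python
def r_squared(efference, afference):
    """Proportion of variance in afference accounted for by a linear model of efference."""
    n = len(efference)
    mean_e = sum(efference) / n
    mean_a = sum(afference) / n
    s_ee = sum((e - mean_e) ** 2 for e in efference)
    s_aa = sum((a - mean_a) ** 2 for a in afference)
    s_ea = sum((e - mean_e) * (a - mean_a) for e, a in zip(efference, afference))
    if s_ee == 0 or s_aa == 0:
        return 0.0  # no variation to model
    return s_ea ** 2 / (s_ee * s_aa)


def attributes_distally(efference, afference, threshold=0.8):
    """Toy criterion: attribute the experience to the distal entity when the
    agent's linear model of efference -> afference fits well enough.
    The threshold is a hypothetical choice."""
    return r_squared(efference, afference) >= threshold


moves = [1, 2, 3, 4]
print(attributes_distally(moves, [3, 5, 7, 9]))  # True: afference = 2 * efference + 1
print(attributes_distally(moves, [2, 5, 2, 5]))  # False: weakly related (R^2 = 0.2)
```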

Just as I attribute a sensory experience to a flower and not the air between me and the flower, so the blind man or the skilled hammer-user can attribute a sensory experience to the ground or the nail, rather than the handle of the cane or hammer. On consideration, I think we can see that these phenomena are very much what Lanier is talking about. When I learn to operate my lobster-body (and to sense with it, which Lanier, 2006, does not discuss), it is because I have modeled an efference–afference relationship, yielding a kind of transparency. This is a quite familiar sort of experience. It might still be a quite dangerous or exciting idea, but its examples are ubiquitous, not restricted to virtual reality labs.

Lanier paraphrases biologist Jim Boyer as counting this capability as a kind of evolutionary artifact – a spandrel in the jargon of evolutionary theory. But I think a much better just-so evolutionary story can be given: it is this capability – to make distal attributions to the limits of the efference–afference relationships we successfully model – that makes us able to use tools so effectively. At an even more basic and general level, it is this capability that makes it possible for us to communicate meaningfully: our utterances have their meaning in the context of triangulating with other people such that the content of what we are saying is related to the common cause of both of our perceptual experiences (Davidson, 1984).

References

Davidson, D. (1984). Inquiries into Truth and Interpretation. Oxford: Clarendon Press.

Lanier, J. (2006). Homuncular flexibility. Edge.

Loomis, J. M. (1992). Distal attribution and presence. Presence: Teleoperators and Virtual Environments, 1(1), 113-119.

White, B. W. (1970). Perceptual findings with the vision-substitution system. IEEE Transactions on Man-Machine Systems, 11(1), 54-58.

Situational variation, attribution, and human-computer relationships

Mobile phones are gateways to our most important and enduring relationships with other people. But, like other communication technologies, the mobile phone is psychologically not only a medium: we also form enduring relationships with devices themselves and their associated software and services (Sundar 2004). While different than relationships with other people, these human–technology relationships are also importantly social relationships. People exhibit a host of automatic, social responses to interactive technologies by applying familiar social rules, categories, and norms that are otherwise used in interacting with people (Reeves and Nass 1996; Nass and Moon 2000).

These human–technology relationships develop and endure over time and through radical changes in the situation. In particular, mobile phones are near-constant companions. They take on the roles of both a medium for communication with other people and an independent interaction partner across dynamic physical, social, and cultural environments and tasks. The global phenomenon of mobile phone use highlights both that relationships with people and technologies are influenced by variable context and that these devices are, in some ways, a constant amidst these everyday changes.

Situational variation and attribution

Situational variation is important for how people understand and interact with mobile technology. This variation is an input to the processes by which people disentangle the internal (personal or device) and external (situational) causes of a social entity’s behavior (Fiedler et al. 1999; Försterling 1992; Kelley 1967), so this situational variation contributes to the traits and states attributed to human and technological entities. Furthermore, situational variation influences the relationship and interaction in other ways. For example, we have recently carried out an experiment providing evidence that this situational variation itself (rather than the characteristics of the situations) influences memory, creativity, and self-disclosure to a mobile service; in particular, people disclose more in places where they have previously disclosed to the service than in new places (Sukumaran et al. 2009).
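To illustrate how variation across situations feeds attribution, here is a sketch of the textbook summary of Kelley’s covariation patterns, recast with a device as the actor and a situation as the stimulus. The recasting and the string labels are our own illustrative assumptions; Kelley’s model and later analysis-of-variance treatments (Försterling 1992) are considerably richer than this three-boolean lookup.

```python
def covariation_attribution(consensus_high, distinctiveness_high, consistency_high):
    """Textbook summary of Kelley's covariation patterns, with a device as the
    actor and a situation as the stimulus.

    consensus: do other devices/services behave this way in this situation?
    distinctiveness: does this device behave this way only in this situation?
    consistency: does this device behave this way here every time?
    """
    if not consistency_high:
        return "circumstance"  # something about this particular occasion
    if not consensus_high and not distinctiveness_high:
        return "device"  # internal: a trait of the device or service
    if consensus_high and distinctiveness_high:
        return "situation"  # external: something about the situation
    return "ambiguous"  # mixed patterns are not cleanly resolved here


# Only this service misbehaves, it misbehaves everywhere, and it does so reliably.
print(covariation_attribution(False, False, True))  # device
```

Without situational variation, distinctiveness (and, for a single device, consensus) cannot be assessed at all, which is one reason a near-constant companion moving through many situations offers so much input to these attributions.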

Not only does the situation vary, but mobile technologies are increasingly responsive to the environments they share with their human interactants. A system’s systematic and purposive responsiveness to the environment means that explaining its behavior is about more than distinguishing internal and external causes: people explain behavior by attributing reasons to the entity, and these reasons may trivially refer to either internal or external causes. For example, contrast “Jack bought the house because it was secluded” (external) with “Jack bought the house because he wanted privacy” (internal) (Ross 1977, p. 176). Much research in the social cognition and attribution theory traditions of psychology has failed to address this richness of people’s everyday explanations of others’ behavior (Malle 2004; McClure 2002), but contemporary, interdisciplinary work is drawing on theories and methods from philosophy and developmental psychology to this end (e.g., the contributions to Malle et al. 2001).

These two developments — the increasing role of situational variation in human-technology relationships and a new appreciation of the richness of everyday explanations of behavior — are important to consider together in designing new research in human-computer interaction, psychology, and communication. Here are three suggestions about directions to pursue in light of this:

Design systems that provide constancy and support through radical situational changes in both the social and physical environment. For example, we have created a system that uses the voices of participants in an upcoming event as audio primes during transition periods (Sohn et al. 2009). This can help ease the transition from a long corporate meeting to a chat with fellow parents at a child’s soccer game.

Design experimental manipulations and measures based on features of folk psychology identified by philosophers. Folk psychology is the implicit theory or set of capabilities by which we attribute propositional attitudes, such as beliefs, thoughts, and desires, to others (Dennett 1987). For example, attributions of propositional attitudes (e.g., beliefs) to an entity have the linguistic feature that one cannot, in general, substitute different terms that refer to the same object while maintaining the truth or appropriateness of the statement. This opacity in attributions of propositional attitudes is the subject of a large literature (e.g., following Quine 1953), but it has not been used as a lens for much empirical work, except in some developmental psychology (e.g., Apperly and Robinson 2003). Human-computer interaction research should use this opacity (and other underused features of folk psychology) in studies of how people think about systems.

Connect work on mental models of systems (e.g., Kempton 1986; Norman 1988) to theories of social cognition and folk psychology. I think we can expect much larger overlap in the processes involved than the current research literature reflects: people use folk psychology to understand, predict, and explain technological systems, not just other people.
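The referential opacity mentioned in the second suggestion can be shown with a toy example. The Superman/Clark Kent case is a standard philosophy illustration, not one from this post, and the names and data below are hypothetical. An extensional predicate depends only on what a name refers to, so swapping co-referring names preserves truth; a belief attribution depends on how the believer represents the content, so the same swap can change its truth.

```python
# Hypothetical example: two names with the same referent.
REFERENT = {"Superman": "kal-el", "Clark Kent": "kal-el"}
CAN_FLY = {"kal-el"}  # an extensional fact about the referent itself


def can_fly(name):
    """Extensional context: truth depends only on what the name refers to."""
    return REFERENT[name] in CAN_FLY


# Beliefs are stored under the descriptions the believer uses, not as referents.
lois_beliefs = {"Superman can fly"}


def lois_believes(sentence):
    """Opaque context: truth depends on how the content is described."""
    return sentence in lois_beliefs


def substitute(sentence, old_name, new_name):
    """Replace one name with another in a sentence."""
    return sentence.replace(old_name, new_name)


# Substituting co-referring names preserves truth in the extensional context...
assert can_fly("Superman") == can_fly("Clark Kent")
# ...but not inside the belief attribution.
print(lois_believes("Superman can fly"))                                        # True
print(lois_believes(substitute("Superman can fly", "Superman", "Clark Kent")))  # False
```

An HCI study in this spirit might check whether people’s statements about what a system “knows” or “wants” resist such substitutions the way their statements about other people do.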

References

Apperly, I. A., & Robinson, E. J. (2003). When can children handle referential opacity? Evidence for systematic variation in 5- and 6-year-old children’s reasoning about beliefs and belief reports. Journal of Experimental Child Psychology, 85(4), 297-311. doi: 10.1016/S0022-0965(03)00099-7.

Dennett, D. C. (1987). The Intentional Stance (p. 388). MIT Press.

Fiedler, K., Walther, E., & Nickel, S. (1999). Covariation-based attribution: On the ability to assess multiple covariates of an effect. Personality and Social Psychology Bulletin, 25(5), 609.

Försterling, F. (1992). The Kelley model as an analysis of variance analogy: How far can it be taken? Journal of Experimental Social Psychology, 28(5), 475-490. doi: 10.1016/0022-1031(92)90042-I.

Kelley, H. H. (1967). Attribution theory in social psychology. In Nebraska Symposium on Motivation (Vol. 15).

Malle, B. F. (2004). How the Mind Explains Behavior: Folk Explanations, Meaning, and Social Interaction. Bradford Books.

Malle, B. F., Moses, L. J., & Baldwin, D. A. (2001). Intentions and Intentionality: Foundations of Social Cognition. MIT Press.

McClure, J. (2002). Goal-based explanations of actions and outcomes. In W. Stroebe & M. Hewstone (Eds.), European Review of Social Psychology (pp. 201-235). John Wiley & Sons. doi: 10.1002/0470013478.ch7.

Nass, C., & Moon, Y. (2000). Machines and Mindlessness: Social Responses to Computers. Journal of Social Issues, 56(1), 81-103.

Norman, D. A. (1988). The Psychology of Everyday Things. New York: Basic Books.

Quine, W. V. O. (1953). From a Logical Point of View: Nine Logico-Philosophical Essays. Harvard University Press.

Reeves, B., & Nass, C. (1996). The media equation: how people treat computers, television, and new media like real people and places (p. 305). Cambridge University Press.

Ross, L. (1977). The intuitive psychologist and his shortcomings: Distortions in the attribution process. In L. Berkowitz (Ed.), Advances in Experimental Social Psychology (Vol. 10, pp. 174-221). New York: Academic Press.

Sohn, T., Takayama, L., Eckles, D., & Ballagas, R. (2009). Auditory Priming for Upcoming Events. Forthcoming in CHI ’09 extended abstracts on Human factors in computing systems. Boston, Massachusetts, United States: ACM Press.

Sukumaran, A., Ophir, E., Eckles, D., & Nass, C. I. (2009). Variable Environments in Mobile Interaction Aid Creativity but Impair Learning and Self-disclosure. To be presented at the Association for Psychological Science Convention, San Francisco, California.

Sundar, S. S. (2004). Loyalty to computer terminals: is it anthropomorphism or consistency? Behaviour & Information Technology, 23(2), 107-118.

Reprioritizing human intelligence tasks for low latency and high throughput on Mechanical Turk

Amazon Mechanical Turk is a platform and market for human intelligence tasks (HITs) that are submitted by requesters and completed by workers (or “turkers”). Each HIT is associated with a payment, often a few cents. This post covers some basics of Mechanical Turk and shows that its lack of designed-in support for dynamic reprioritization is problematic for some uses. I also mention some other factors that influence latency and throughput.

With mTurk one can create a HIT that asks someone to rate some search results for a query, evaluate the credibility of a Wikipedia article, draw a sheep facing left, enter names for a provided color, annotate a photo of a person with pose information, or create a storyboard illustrating a new product idea. So Mechanical Turk can be used in many ways for basic research, building a training set for machine learning, or actually enabling a (perhaps prototype) service in use through a kind of Wizard-of-Oz approach. Additionally, I’ve used mTurk to code images captured by participants in a lab experiment (more on this in another post or article).

When creating HITs, a requester can specify a QuestionForm (QF) (e.g., via command line tools or an SDK) that is then presented to the worker by Amazon. This can include images, free-text answers, multiple choice, etc. Additionally, one can embed Flash or Java objects in it. But the easiest way of creating HITs is to use a QF without creating a Java or Flash application of one’s own, especially for HITs that are handled well by the basic question form. The other option is to create an ExternalQuestion (EQ), which is hosted on one’s own server and displayed in an iframe. This provides greater freedom but requires additional development, and you must host the page yourself (though you can do so through Amazon’s S3). QF HITs (without embeds) also offer a familiar interface to workers (though it is possible to create a more efficient, custom interface by, e.g., making all the targets larger). So when possible, it is often preferable to use a QF rather than an EQ.
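For concreteness, an EQ HIT’s question is a short XML document pointing at your page. The sketch below builds one, assuming Amazon’s 2006-07-14 ExternalQuestion schema; the task URL is hypothetical. The resulting string is what would be passed as the question when creating the HIT (e.g., the `Question` parameter of boto3’s MTurk `create_hit`); the HIT-creation call itself is omitted here.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape

# Namespace of Amazon's ExternalQuestion schema (2006-07-14 version).
EXTERNAL_QUESTION_NS = (
    "http://mechanicalturk.amazonaws.com/"
    "AWSMechanicalTurkDataSchemas/2006-07-14/ExternalQuestion.xsd"
)


def external_question(url, frame_height=600):
    """Build the XML for an ExternalQuestion that shows `url` in an iframe."""
    return (
        f'<ExternalQuestion xmlns="{EXTERNAL_QUESTION_NS}">'
        f"<ExternalURL>{escape(url)}</ExternalURL>"  # '&' in query strings must be escaped
        f"<FrameHeight>{int(frame_height)}</FrameHeight>"
        "</ExternalQuestion>"
    )


question_xml = external_question("https://example.com/rate?batch=7&lang=en")
root = ET.fromstring(question_xml)  # sanity check: the document is well-formed
print(root.find(f"{{{EXTERNAL_QUESTION_NS}}}ExternalURL").text)
```

Everything the worker actually sees lives on the page at that URL, which is both the extra development burden and the source of the flexibility discussed below.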

For some of the uses of mTurk for powering a service, it can be important to minimize latency for specific HITs[1], including prioritizing particular new HITs over previously created HITs. For example, once a HIT has gone uncompleted for some period after its creation, it may still be important to complete it, but exactly when it is completed may matter much less. This can easily happen if the value of completing a HIT drops off sharply after some time.

This should be done while maintaining high throughput; that is, you don’t want to reduce the rate at which your HITs are completed. When there are more HITs of the same type, workers can check a box to immediately start the next HIT of the same type when they submit the current one (see screenshot). Workers will often complete many HITs of the same type in a row. So throughput can drop substantially if workers ever run out of HITs of the same type: they may switch to another HIT type, or, even if they return once your HITs reappear, there will have been a delay. As we’ll see, these two requirements don’t seem to be well met by the platform, or at least by certain uses of it.

Mechanical Turk does not provide a mechanism for prioritizing HITs of the same type, so without deleting all but particular high-priority HITs of that type, there is not a way to ensure that some particular HIT gets done before the rest. And deleting the other HITs would hurt throughput and increase latency for any new high-priority HITs added in the near future (since workers won’t simply start these once they finish their previous HITs).

EQ HITs allow one to avoid this problem. Unlike with QF HITs (without Flash and Java embeds), one does not have to specify the full content of the HIT in advance. When a worker accepts an EQ HIT, you can dynamically serve whichever task you want, depending on current priorities. But this means giving up, e.g., the simplicity of creating QF HITs and managing their data. So though there are ways of coping, adding dynamic reprioritization to Mechanical Turk would be a boon for time-sensitive uses.
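A minimal sketch of the server side of this approach, under stated assumptions: `SubTask`, `DynamicQueue`, and `completion_value` are hypothetical names, and the five-minute window and the two values are made up to mimic a value of completion that drops off sharply. When a worker loads the EQ page, the server picks the pending task with the highest current value (ties go to the oldest, i.e., the one closest to the end of its window). Values change as time passes, so they are recomputed at each request rather than stored in a heap.

```python
import time
from dataclasses import dataclass


@dataclass
class SubTask:
    task_id: str
    created: float  # creation time, in seconds


def completion_value(task, now, window=300.0, urgent=1.0, late=0.1):
    """Hypothetical value of completing `task` at time `now`: high within
    `window` seconds of creation, then a sharp drop to a lower, nonzero value."""
    return urgent if now - task.created <= window else late


class DynamicQueue:
    """Pending sub-tasks, chosen only when a worker actually loads the page."""

    def __init__(self):
        self.pending = {}

    def add(self, task):
        self.pending[task.task_id] = task

    def requeue(self, task):
        # e.g., the worker returned or abandoned the assignment
        self.add(task)

    def next_for_worker(self, now=None):
        """Pick the pending task with the highest current value; among equals,
        the oldest. Returns None if nothing is pending."""
        if not self.pending:
            return None
        now = time.time() if now is None else now
        best = max(self.pending.values(),
                   key=lambda t: (completion_value(t, now), -t.created))
        del self.pending[best.task_id]
        return best


queue = DynamicQueue()
queue.add(SubTask("stale", created=0.0))
queue.add(SubTask("fresh", created=1000.0))
print(queue.next_for_worker(now=1100.0).task_id)  # fresh: still inside its window
print(queue.next_for_worker(now=1100.0).task_id)  # stale
```

Because the content is chosen only at load time, the requester can keep a steady supply of EQ HITs of a single type posted, so workers who chain HITs of the same type are not interrupted, while newly urgent tasks still jump ahead.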

There are, of course, other factors that influence latency and throughput on mTurk when (EQ) HITs are reprioritized. Here are a few:

- HIT and sub-task duration. How long does it take for workers to complete a HIT, which may be composed of multiple sub-tasks? A worker cannot be assigned a new HIT until they complete (or return) the previous one. This can be somewhat avoided by creating longer HITs that are subdivided into dynamically selected sub-tasks. This can be done with an EQ HIT or an embedded Flash or Java application in a QF HIT. But the sub-task duration is always a limiting factor, unless one is willing to abort the current sub-task and replace it while it is still in progress (with an EQ, Flash, or Java).

- Available workers. How many workers are logged into mTurk and completing tasks? How many are currently switching HIT types? This can vary with the time of day.

- Appeal of your HITs. How much do workers like your HITs — are they fun? How much do you pay for how much you ask? How many of their completed assignments do you approve?

- Reliability. How accurate or precise must your results be? How many workers do you need to complete a HIT before you have reliable results? Do other workers need to complete meta-HITs before the data can be used?
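A back-of-the-envelope calculation shows why sub-task duration limits latency, under simplifying assumptions that are ours rather than the post’s: all N available workers are busy with your sub-tasks of duration d, each is at an independent, uniformly random point in its current sub-task, and a new urgent task can only be picked up when some worker finishes. The wait W is then the minimum of N independent Uniform(0, d) residual times:

```latex
\Pr(W > t) = \left(1 - \frac{t}{d}\right)^{N}, \quad 0 \le t \le d,
\qquad
\mathbb{E}[W] = \int_0^d \left(1 - \frac{t}{d}\right)^{N} dt = \frac{d}{N+1}.
```

If new work can instead only be assigned at HIT boundaries and each HIT bundles k sub-tasks, d becomes kd and the expected wait grows to kd/(N+1). For example, with N = 9 workers and 30-second sub-tasks, the expected wait is 3 seconds with dynamically selected sub-tasks, versus 30 seconds if each HIT bundles ten fixed sub-tasks.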

[1] I use the term HIT somewhat loosely in this article. There are at least three uses, each with different identity conditions. (1) There are HITs considered as human intelligence tasks, and thus divided as we divide tasks; this means that a HIT in another sense can be composed of multiple HITs in this sense (tasks or sub-tasks). (2) There are HITs in Amazon’s technical sense of the term: a HIT is something that has the same HIT ID and therefore has the same specification. In QF HITs without embeds, this means all instances (assignments) of a HIT are the same in content; but in EQ HITs this is not necessarily true, since the content can be determined when assigned. (3) Finally, there is what Amazon calls assignments, specific instances of a HIT that are each completed only once.