Search queries in referrer headers: Technical knowledge, privacy, and the status quo

I have been fascinated by Christopher Soghoian’s complaint to the FTC about Google’s practices of including search query information in the HTTP referrer header.

In summary, Google has taken proactive efforts to ensure that Web site owners that get visitors from Google search receive the search terms entered by Google’s users. Meanwhile, Google has agreed that search query data is personally sensitive information and that it does not disclose this information, except under specific, limited circumstances; this is reflected in its privacy policy. Note that Google has not just let the URL do the work, but has specifically worked to make the referrer header include search terms (and additional information) when it has adopted techniques that would otherwise prevent these disclosures from being made. (For a fuller summary, see his blog post and this WSJ article. Or this article at Search Engine Land.)

I am not going to discuss the ethics and legal issues in this particular case. Instead, I just want to draw attention to how this issue reveals the importance of technical knowledge in thinking about privacy issues.

A common response from people working in the Internet industry is that Soghoian is a non-techie who has suddenly “discovered” referrer headers. For example, Danny Sullivan writes “former FTC employee discovers browsers sends referrer strings, turns it into google conspiracy”. (Of course, Soghoian is actually technically savvy, as reading the complaint to the FTC makes clear.)

What’s going on here? Folks with technical knowledge perceive search query disclosure as the status quo (though I bet most don’t often think about the consequences of clicking on a link after a sensitive search).

But how would most Internet users be aware of this? Certainly not through Google’s statements, or through warnings from Web browsers. One of the few ways I think users might realize this is happening is through query-highlighting — on forums, mailing list archives, and spammy pages. So a super-rational user who cares to think about how that works might guess something like this is going on. But I doubt most users would actively work out the mechanisms involved. Furthermore, their observations likely radically underdetermine the mechanism anyway, since it is quite reasonable that a Web browser could do this kind of highlighting directly, especially for formulaic sites, like forums. Even casual use of Web analytics software (such as Google Analytics) may not make it clear that this per-user information is being provided, since aggregated data could reasonably be used to present summaries of top search queries leading to a Web site.
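To make the mechanism concrete, here is a minimal sketch of how a site could do query-highlighting from the referrer alone. It assumes the search terms arrive in a `q` parameter of the referring URL; the function names, the example referrer, and the asterisk-style highlighting are all made up for illustration, not taken from any particular forum software.

```python
import re
from urllib.parse import urlparse, parse_qs


def search_terms_from_referrer(referrer: str) -> list[str]:
    """Return the search terms carried in a referrer URL's `q` parameter."""
    query = parse_qs(urlparse(referrer).query)
    # parse_qs decodes '+' and %-escapes; a missing `q` yields no terms.
    return query.get("q", [""])[0].split()


def highlight(text: str, terms: list[str]) -> str:
    """Wrap each occurrence of a search term in asterisks, ignoring case."""
    for term in terms:
        text = re.sub(
            re.escape(term),
            lambda m: f"*{m.group(0)}*",
            text,
            flags=re.IGNORECASE,
        )
    return text


# Hypothetical referrer, as a site might see it after a search click.
referrer = "http://www.google.com/search?q=insomnia+treatment&hl=en"
terms = search_terms_from_referrer(referrer)
page = highlight("Thread: which insomnia treatment worked for you?", terms)
```

The point is only that the site learns the visitor's exact query on that single page view; nothing about the highlighted page tells the visitor where the terms came from.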

This should be a reminder why empirical studies of privacy attitudes and behaviors are useful: we techie folks often have severe blind spots. I don’t know that this is just a matter of differences in expectations; it may also involve differences in preferences. Over time, these expectations change our sense of the status quo, from which we can calibrate our preferences and intentions.

Google has worked to ensure that referrer headers continue to include search query information — even as it adopts techniques under which the standard inclusion of the URL in that header would no longer carry it. A difference in beliefs about the status quo puts these actions by Google in a different context. For us techies, that is just maintaining the status quo (which may seem more desirable, since we know it’s the industry-wide standard). For others, it might seem more like Google putting advertisers and Web site owners above its promises to its users about their sensitive data.

Aardvark’s use of Wizard of Oz prototyping to design their social interfaces

The Wall Street Journal’s Venture Capital Dispatch reports on how Aardvark, the social question asking and answering service recently acquired by Google, used a Wizard of Oz prototype to learn about how their service concept would work without building all the tech before knowing if it was any good.

Aardvark employees would get the questions from beta test users and route them to users who were online and would have the answer to the question. This was done to test out the concept before the company spent the time and money to build it, said Damon Horowitz, co-founder of Aardvark, who spoke at Startup Lessons Learned, a conference in San Francisco on Friday.

“If people like this in super crappy form, then this is worth building, because they’ll like it even more,” Horowitz said of their initial idea.

At the same time it was testing a “fake” product powered by humans, the company started building the automated product to replace humans. While it used humans “behind the curtain,” it gained the benefit of learning from all the questions, including how to route the questions and the entire process with users.

This is a really good idea, as I’ve argued before on this blog and in a chapter for developers of mobile health interventions. What better way to (a) learn about how people will use and experience your service and (b) get training data for your machine learning system than to have humans-in-the-loop run the service?

My friend Chris Streeter wondered whether this was all done by Aardvark employees or whether workers on Amazon Mechanical Turk may have also been involved, especially in identifying the expertise of the early users of the service so that the employees could route the questions to the right place. I think this highlights how different parts of a service can draw on human and non-human intelligence in a variety of ways — via a micro-labor market, using skilled employees who will gain hands-on experience with customers, etc.

I also wonder what UIs the humans-in-the-loop used to accomplish this. It’d be great to get a peek. I’d expect that these were certainly rough around the edges, as was the Aardvark customer-facing UI.

Aardvark does a good job of being a quite sociable agent (e.g., when using it via instant messaging) that also gets out of the way of the human–human interaction between question askers and answerers. I wonder how the language used by humans to coordinate and hand off questions may have played into creating a positive para-social interaction with vark.

Not just predicting the present, but the future: Twitter and upcoming movies

Search queries have been used recently to “predict the present”, as Hal Varian has called it. Now some initial use of Twitter chatter to predict the future:

The chatter in Twitter can accurately predict the box-office revenues of upcoming movies weeks before they are released. In fact, Tweets can predict the performance of films better than market-based predictions, such as Hollywood Stock Exchange, which have been the best predictors to date. (Kevin Kelly)

Here is the paper by Asur and Huberman from HP Labs. Also see a similar use of online discussion forums.

But the obvious question from my previous post is, how much improvement do you get by adding more inputs to the model? That is, how does the combined Hollywood Stock Exchange and Twitter chatter model perform? The authors report adding the number of theaters the movie opens in to both models, but not combining them directly.

Search terms and the flu: preferring complex models

Simplicity has its draws. A simple model of some phenomenon can be quick to understand and test. But with the resources we have today for theory building and prediction, it is worth recognizing that many phenomena of interest (e.g., in the social sciences, epidemiology) are very, very complex. Using a more complex model can help. It’s great to try many simple models along the way — as scaffolding — but if you have a large enough N in an observational study, a larger model will likely be an improvement.

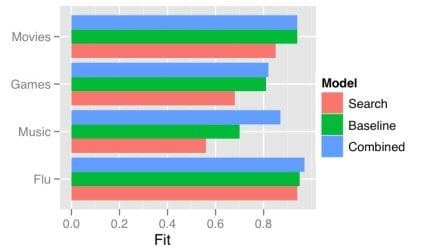

One obvious way a model gets more complex is by adding predictors. There has recently been a good deal of attention on using the frequency of search terms to predict important goings-on — like flu trends. Sharad Goel et al. (blog post, paper) temper the excitement a bit by demonstrating that simple models using other, existing public data sets outperform the search data. In some cases (music popularity, in particular), adding the search data to the model improves predictions: the more complex combined model can “explain” some of the variance not handled by the more basic non-search-data models.

This echoes one big takeaway from the Netflix Prize competition: committees win. The top competitors were all large teams formed from smaller teams and their models were tuned combinations of several models. That is, the strategy is, take a bunch of complex models and combine them.

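One way of doing this is just taking a weighted average of the predictions of several simpler models. This works quite well when your measure of the value of your model is root mean squared error (RMSE): since RMSE is a convex function of the predictions, the RMSE of the averaged predictions is never worse than the same weighted average of the individual models’ RMSEs, and it is often better because the models’ errors partly cancel.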
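Here is a small sketch of that committee idea in plain Python. The two "models" are just made-up prediction lists, and the equal weights are an arbitrary choice; a real blend would tune the weights on held-out data.

```python
import math


def rmse(predictions, actuals):
    """Root mean squared error between two equal-length sequences."""
    squared = [(p - a) ** 2 for p, a in zip(predictions, actuals)]
    return math.sqrt(sum(squared) / len(actuals))


def blend(models, weights):
    """Weighted average of several models' predictions, item by item."""
    return [
        sum(w * m[i] for w, m in zip(weights, models))
        for i in range(len(models[0]))
    ]


# Made-up numbers for illustration only.
actuals = [3.0, 4.0, 2.0, 5.0]
model_a = [3.5, 3.0, 2.5, 4.0]
model_b = [2.0, 4.5, 1.0, 5.5]
weights = [0.5, 0.5]  # non-negative and summing to 1

blended = blend([model_a, model_b], weights)

# Convexity guarantees the blend's RMSE is at most this weighted average.
bound = sum(w * rmse(m, actuals) for w, m in zip(weights, [model_a, model_b]))

print(rmse(model_a, actuals), rmse(model_b, actuals), rmse(blended, actuals))
```

In this toy case the two models err in opposite directions on most items, so the blend does much better than either one; convexity only promises it will not do worse than the weighted average of their errors.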

While often the larger model “explains” more of the variance, what “explains” means here is just that the R-squared is larger: less of the variance is error. More complex models can be difficult to understand, just like the phenomena they model. We will continue to need better tools to understand, visualize, and evaluate our models as their complexity increases. I think the committee metaphor will be an interesting and practical one to apply in the many cases where the best we can do is use a weighted average of several simpler, pretty good models.
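For reference, the usual definition of R-squared behind that sense of “explains” can be written as follows. The symbols are my own notation here: $y_i$ are the observed values, $\hat{y}_i$ the model’s predictions, and $\bar{y}$ the mean of the observations.

```latex
R^2 = 1 - \frac{\sum_i (y_i - \hat{y}_i)^2}{\sum_i (y_i - \bar{y})^2}
```

The numerator is the variance left over as error, so a larger R-squared just means that this leftover share is smaller.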

Keyword searching papers citing a highly-cited paper with Google Scholar

[Update: Google Scholar now directly supports this feature: check the box right below the search box after clicking “Cited by…”.]

In finding relevant research, once one has found something interesting, it can be really useful to do “reverse citation” searches.

Google Scholar is often my first stop when finding research literature (and for general search), and it has this feature — just click “Cited by 394”. But it is not very useful when your starting point is highly cited. What I often want to do is to do a keyword search of the papers that cite my highly-cited starting point.

While there is no GUI for this search within these results in Google Scholar, you can actually do it by hacking the URL. Just add the keyword query to the URL.

This is the URL one gets for all resources Google has as citing Allport’s “Attitudes” (1935):

http://scholar.google.com/scholar?cites=9150707851480450787&hl=en

And this URL searches within those for “indispensable concept”:

http://scholar.google.com/scholar?hl=en&cites=9150707851480450787&q=indispensable+concept

In this particular case, this gives us many examples of authors citing Allport’s comment that the attitude is the most distinctive and indispensable concept in social psychology. This example highlights that this can even just help get more useful “snippets” in the search results, even if it doesn’t narrow down the results much.
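If you do this often, the URL hack is easy to script. This is a minimal sketch using only the parameters visible in the URLs above (`hl`, `cites`, `q`); the function name is made up, and I am assuming Google Scholar keeps accepting these parameters in this form.

```python
from urllib.parse import urlencode


def cited_by_search_url(cites_id: str, keywords: str, lang: str = "en") -> str:
    """Build a Google Scholar URL that keyword-searches the papers citing a work.

    `cites_id` is the number after `cites=` in the "Cited by" link.
    """
    params = {"hl": lang, "cites": cites_id, "q": keywords}
    # urlencode escapes the keywords and joins words with '+'.
    return "http://scholar.google.com/scholar?" + urlencode(params)


url = cited_by_search_url("9150707851480450787", "indispensable concept")
print(url)
```

For the Allport example this reproduces the second URL above.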

I find this useful in many cases. Maybe you will also.