Ethical persuasion profiling?

Persuasion profiling — estimating the effects of available influence strategies on an individual and adaptively selecting the strategies to use based on these estimates — sounds a bit scary. For many, ‘persuasion’ is a dirty word and ‘profiling’ generally doesn’t have positive connotations; together they are even worse! So why do we use this label?

In fact, Maurits Kaptein and I use this term, coined by BJ Fogg, precisely because it sounds scary. We see the potential for quite negative consequences of persuasion profiling, so we try to alert our readers to this.1

On the other hand, we also think that persuasion profiling is not only sometimes beneficial, but that there are cases where choosing not to adapt to individual differences in this way might itself be unethical.2 Suppose a company marketing a health intervention knows that people vary substantially in how they respond to the strategies used in the intervention, such that the intervention has positive effects on average but negative effects for some. Then it seems like the company has two ethical options.

First, they can be honest about this in their marketing, reminding consumers that it doesn’t work for everyone or even trying to market it to the people it is more likely to work for. Second, they could make this interactive intervention adapt to individuals, that is, use persuasion profiling.

Actually, for the first option to really work, the company needs at least to model how these responses vary by observable characteristics that can be targeted in marketing (e.g., demographics). And this may not be enough if there is too much heterogeneity: even within a given demographic bucket, the intervention may have negative effects for a good number of would-be users. By implementing persuasion profiling, on the other hand, the company can make the intervention help more people, and it will be able to market the intervention more widely, and more ethically.
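The adaptive option can be made concrete with a minimal Python sketch of what persuasion profiling could look like. The sketch rests on several assumptions. Each person's response to each influence strategy is treated as a yes/no outcome. Estimates use a Beta prior, which is a crude stand-in for pooling information across the population. Strategies are chosen by Thompson sampling. The class, the strategy names, and the response probabilities are all hypothetical, and this is an illustration rather than the model from the paper cited in the footnotes.

```python
import random
from collections import defaultdict


class PersuasionProfile:
    """Hypothetical sketch: per-person Beta-Bernoulli estimates of how well each
    influence strategy works, used to choose strategies adaptively."""

    def __init__(self, strategies, prior_successes=1.0, prior_failures=1.0):
        self.strategies = list(strategies)
        # Prior pseudo-counts (assumed); in practice these might come from population data.
        self.a0 = prior_successes
        self.b0 = prior_failures
        # counts[person][strategy] = [successes, failures]
        self.counts = defaultdict(lambda: {s: [0, 0] for s in self.strategies})

    def choose(self, person, rng):
        """Thompson sampling: draw once from each strategy's posterior, pick the largest."""
        draws = {
            s: rng.betavariate(self.a0 + succ, self.b0 + fail)
            for s, (succ, fail) in self.counts[person].items()
        }
        return max(draws, key=draws.get)

    def update(self, person, strategy, success):
        """Record whether the chosen strategy worked for this person."""
        self.counts[person][strategy][0 if success else 1] += 1

    def estimate(self, person, strategy):
        """Posterior mean probability that the strategy works for this person."""
        succ, fail = self.counts[person][strategy]
        return (self.a0 + succ) / (self.a0 + self.b0 + succ + fail)

    def best_strategy(self, person):
        return max(self.strategies, key=lambda s: self.estimate(person, s))


# Hypothetical true response rates: each strategy works well for one person
# and poorly for the other.
TRUE_P = {
    "person_a": {"authority": 0.7, "social_proof": 0.2},
    "person_b": {"authority": 0.2, "social_proof": 0.7},
}

rng = random.Random(42)
profile = PersuasionProfile(["authority", "social_proof"])
for _ in range(500):
    for person, probs in TRUE_P.items():
        strategy = profile.choose(person, rng)
        profile.update(person, strategy, rng.random() < probs[strategy])

for person in TRUE_P:
    print(person, profile.best_strategy(person))
```

The two simulated people respond to the strategies in opposite ways. Any single strategy chosen for everyone would therefore work poorly for one of them. The per-person estimates, by contrast, steer each person toward the strategy that works for them.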

The health intervention case is a simplified example, somewhat compelling to me at least, but certainly not airtight. In another post, I’ll describe how somewhat foreseeable but unintended consequences should also give one pause.

- You might say we tried to build in a warning for anyone discussing or promoting this work.

- We argue this in the paper we presented at Persuasive Technology 2010. The rest of this post reprises some of what we said about our “Example 4” in that paper.

Kaptein, M. & Eckles, D. (2010). Selecting effective means to any end: Futures and ethics of persuasion profiling. Proceedings of Persuasive Technology 2010, Lecture Notes in Computer Science. Springer.

Political arithmetic: The Joy of Stats

The Joy of Stats with Hans Rosling is quite engaging — and worth watching. I really enjoyed the historical threads running through the piece. I think he’s right to emphasize how data collection by states — to understand and control their populations — is at the origin of statistics. With increasing data collection today, this is a powerful and necessary reminder of the range of ends to which data analysis can be put.

Like others, I found the scenes with Rosling behind a bubble plot hard to follow because of the distracting lights and windows in the background. And the ending, with its analysis of “what it means to be human”, was a bit much for me. But these are small complaints about a compelling documentary.

Homophily and peer influence are messy business

Some social scientists have recently been getting themselves into trouble (and the limelight) by claiming that they have evidence of direct and indirect “contagion” (peer influence effects) in obesity, happiness, loneliness, etc. Statisticians and methodologists, and even science journalists, have pointed out the problems with these claims. In observational data, peer influence effects are confounded with the effects of homophily and of common external causes. That is, people are similar to others in their social neighborhood for two reasons. First, ties are more likely to form between similar people. Second, many external events that could cause the outcome are localized in networks (e.g., a fast food restaurant opens down the street).
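A small simulation can make the homophily point concrete. In the sketch below, each person's outcome depends only on their own trait, so by construction there is no peer influence at all. Ties are formed between people with similar traits, which is an extreme form of homophily assumed for illustration. The outcome is nonetheless correlated across these ties, while it is roughly uncorrelated across randomly formed ties. All names and parameters are hypothetical.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def tie_outcome_correlation(ties, outcome):
    """Correlation of the outcome between the two ends of each tie."""
    left = [outcome[i] for i, _ in ties]
    right = [outcome[j] for _, j in ties]
    return pearson(left, right)


rng = random.Random(1)
n = 2000
trait = [rng.gauss(0, 1) for _ in range(n)]
# Each person's outcome depends only on their own trait: no peer influence.
outcome = [t + rng.gauss(0, 1) for t in trait]

# Homophilous ties: pair up people who are adjacent when sorted by the trait.
order = sorted(range(n), key=lambda i: trait[i])
homophilous_ties = [(order[k], order[k + 1]) for k in range(0, n - 1, 2)]

# Random ties for comparison.
shuffled = list(range(n))
rng.shuffle(shuffled)
random_ties = [(shuffled[k], shuffled[k + 1]) for k in range(0, n - 1, 2)]

r_homophily = tie_outcome_correlation(homophilous_ties, outcome)
r_random = tie_outcome_correlation(random_ties, outcome)
print("homophilous ties:", round(r_homophily, 3))
print("random ties:", round(r_random, 3))
```

A naive analysis of the homophilous network would see friends resembling each other in the outcome and might read that as contagion. Here the resemblance comes entirely from who befriends whom.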

Econometricians1 have worked out the conditions necessary for peer influence effects to be identifiable.2 Very few studies have plausibly satisfied these requirements. But even when an investigator meets them, it is worth remembering that homophily and peer influence are still tricky to think about, let alone to estimate credibly.

As Andrew Gelman notes, homophily can depend on network structure and on information cascades (a kind of peer influence effect) that enable homophilous relationships to form. Likewise, the success or failure of influence in a relationship can affect that relationship. For example, once I convert you to my way of thinking (let’s say, about climate change), we’ll be better friends. To me, it seems like some of the downstream consequences of our similarity should be attributed to peer influence. If I get fat and so do you, it could be peer influence in many ways. Maybe that’s because I convinced you that owning a propane grill is more environmentally friendly, and then we both ended up grilling a lot more red meat. Sounds like peer influence to me. But it’s not that my getting fat caused you to get fat.

Part of the problem here is looking at peer influence effects on only a single behavior or outcome at a time. I look forward to the “clear thinking and adequate data” (Manski) that will allow us to better understand these processes in the future. Until then, scientists, please at least be modest in your claims and cautious with radical policy recommendations. This is messy business.

- They do statistics but speak a different language than big-“S” statisticians, kind of like machine learning folks.

- For example, see Manski, C. F. (2000). Economic analysis of social interactions. Journal of Economic Perspectives, 14(3):115–136. Economists call peer influence effects endogenous interactions and contextual interactions.

Search terms and the flu: preferring complex models

Simplicity has its appeal. A simple model of some phenomenon can be quick to understand and test. But with the resources we have today for theory building and prediction, it is worth recognizing that many phenomena of interest (e.g., in the social sciences and epidemiology) are very, very complex. Using a more complex model can help. It’s great to try many simple models along the way, as scaffolding. But if you have a large enough N in an observational study, a larger model will likely be an improvement.

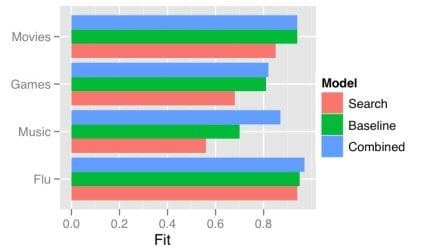

One obvious way a model gets more complex is by adding predictors. There has recently been a good deal of attention on using the frequency of search terms to predict important goings-on, like flu trends. Sharad Goel et al. (blog post, paper) temper the excitement a bit by demonstrating that simple models using other, existing public data sets outperform models based on the search data. In some cases (music popularity, in particular), adding the search data to the model improves predictions: the more complex combined model can “explain” some of the variance not handled by the more basic non-search-data models.

This echoes one big takeaway from the Netflix Prize competition: committees win. The top competitors were all large teams formed from smaller teams, and their models were tuned combinations of several models. That is, the strategy is to take a bunch of complex models and combine them.

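One way of doing this is simply to take a weighted average of the predictions of several simpler models. This works quite well when your measure of a model’s value is root mean squared error (RMSE). RMSE is convex in the predictions, so for non-negative weights that sum to one, the averaged predictions can have no higher RMSE than the same weighted average of the individual models’ RMSEs.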
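Here is a minimal sketch of such a weighted-average committee, run on synthetic data. The data are an assumption; no real flu or search data is used. The guarantee from convexity follows from the triangle inequality: the committee's RMSE is at most the weighted average of the members' RMSEs. When the members' errors are partly independent, as simulated here, the committee can also beat every member. The function names are illustrative.

```python
import math
import random


def rmse(preds, actual):
    """Root mean squared error of a list of predictions."""
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(preds, actual)) / len(actual))


def committee(model_preds, weights):
    """Weighted average of several models' predictions (weights >= 0, summing to 1)."""
    return [sum(w * preds[i] for w, preds in zip(weights, model_preds))
            for i in range(len(model_preds[0]))]


# Synthetic target and two "pretty good" models whose errors are independent.
rng = random.Random(0)
actual = [rng.gauss(0, 1) for _ in range(1000)]
model_a = [y + rng.gauss(0, 0.5) for y in actual]
model_b = [y + rng.gauss(0, 0.5) for y in actual]

weights = [0.5, 0.5]
combined = committee([model_a, model_b], weights)

member_rmse = [rmse(model_a, actual), rmse(model_b, actual)]
# Convexity: the committee's RMSE cannot exceed this weighted average of member RMSEs.
convexity_bound = sum(w * r for w, r in zip(weights, member_rmse))
print("members:", member_rmse)
print("committee:", rmse(combined, actual), "bound:", convexity_bound)
```

The weights are fixed at 0.5 here. In practice they would typically be tuned on held-out data, which is closer to the tuned combinations used by the Netflix Prize teams.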

While the larger model often “explains” more of the variance, what “explains” means here is just that the R-squared is larger: less of the variance is error. More complex models can be difficult to understand, just like the phenomena they model. We will continue to need better tools to understand, visualize, and evaluate our models as their complexity increases. I think the committee metaphor will be an interesting and practical one to apply in the many cases where the best we can do is use a weighted average of several simpler, pretty good models.
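The usual definition of R-squared makes this precise. Take observations \(y_i\), predictions \(\hat{y}_i\), and their mean \(\bar{y}\) over \(n\) cases; this notation is introduced here for illustration. R-squared compares residual error to total variance, and it is tied directly to RMSE:

```latex
R^2 \;=\; 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}
\;=\; 1 - \frac{\mathrm{RMSE}^2}{\hat{\sigma}_y^2},
\qquad \hat{\sigma}_y^2 = \frac{1}{n}\sum_{i=1}^{n} (y_i - \bar{y})^2 .
```

So on a fixed data set, a lower RMSE and a higher R-squared are the same thing. Neither says anything about whether the model is easy to understand.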

Texting 4 Health conference in review

As I blogged already, I attended and spoke at the first Texting 4 Health conference at Stanford University last week. You can see my presentation slides at SlideShare here, and the program, with links to the slides for most speakers, is here.

The conference was very interesting, and there was quite a mix of participants, both speakers and others. There were medical school faculty, business people, people from NGOs and foundations, technologists, representatives of government agencies and centers, futurists, and social scientists. Everyone had something to learn; I know I did. This diversity also made speaking somewhat difficult, because it is hard to know how best to reach, inform, and hold the interest of such an audience: what is common ground for some is entirely new territory for others.

I think my favorite session was “Changing Health Behavior via SMS”. The methods the panelists used to evaluate their interventions were both interesting to reflect on and good tools for persuading me of the importance and effectiveness of their work. One of my reflections was about which factors to vary in experiments on health interventions. There is a (reasonable) focus on having a no-SMS control condition, but very few studies manipulate more fine-grained dimensions. Of course, the field is young, and I understand how important true controls are in medical domains. But I think that real progress in understanding mobile messaging and designing effective interventions will require looking at more subtle and theoretically valuable manipulations.
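Here is one hedged illustration of what richer manipulations could look like. The sketch keeps a no-SMS control but also crosses two message dimensions in a small factorial design. It then assigns participants to conditions in balanced random order. The factor names and levels (framing, timing) are hypothetical placeholders, not dimensions tested by any of the panelists.

```python
import itertools
import random
from collections import Counter

# Hypothetical message dimensions to manipulate within the SMS arms.
FACTORS = {
    "framing": ["gain", "loss"],
    "timing": ["morning", "evening"],
}


def build_conditions(factors):
    """A no-SMS control plus every combination of the factor levels."""
    names = list(factors)
    conditions = [{"sms": False}]
    for levels in itertools.product(*(factors[name] for name in names)):
        conditions.append({"sms": True, **dict(zip(names, levels))})
    return conditions


def assign(participants, n_conditions, seed=0):
    """Balanced random assignment: shuffle participants, then deal them out in turn.
    Returns a mapping from participant to condition index."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    return {p: k % n_conditions for k, p in enumerate(shuffled)}


conditions = build_conditions(FACTORS)
assignment = assign(range(100), len(conditions))
counts = Counter(assignment.values())
for index, condition in enumerate(conditions):
    print(condition, counts[index])
```

The control still answers whether texting helps at all. Comparing the SMS arms with each other lets such a design also say something about which features of the messages matter.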

You can see other posts about the conference here and here. The conference Web site is also starting a blog, which will be worth watching.