Against between-subjects experiments

A less widely known reason for using within-subjects experimental designs in psychological science. In a within-subjects experiment, each participant experiences multiple conditions (say, multiple persuasive messages), while in a between-subjects experiment, each participant experiences only one condition.

If you ask a random social psychologist, “Why would you run a within-subjects experiment instead of a between-subjects experiment?”, the most likely answer is “power”: within-subjects experiments provide more power. That is, with the same number of participants, within-subjects experiments allow investigators to more easily tell that observed differences between conditions are not due to chance.1

Why do within-subjects experiments increase power? Because responses by the same individual are generally dependent; more specifically, they are often positively correlated. Say an experiment involves evaluating products, people, or policy proposals under different conditions, such as the presence of different persuasive cues or following different primes. It is often the case that participants who rate an item high on a scale under one condition will rate other items high on that scale under other conditions. Or participants with short response times for one task will have relatively short response times for another task. Et cetera. This positive association might be due to stable characteristics of people or transient differences such as mood. Thus, the increase in power is due to heterogeneity in how individuals respond to the stimuli.
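To make the power argument concrete, here is a minimal sketch comparing the sampling variance of the estimated difference between two conditions under each design. It assumes two conditions with equal outcome variance `sigma2`, a correlation `rho` between a participant's two responses, no carryover effects, and the same total number of participants `n_total`. The function names are illustrative, not from any library.

```python
def var_between(sigma2, n_total):
    """Variance of the difference in means when n_total participants
    are split evenly, n_total / 2 per condition."""
    per_group = n_total / 2
    return sigma2 / per_group + sigma2 / per_group


def var_within(sigma2, rho, n_total):
    """Variance of the mean paired difference when all n_total
    participants respond under both conditions."""
    return (2 * sigma2 - 2 * rho * sigma2) / n_total


sigma2, rho, n_total = 1.0, 0.5, 100
print(var_between(sigma2, n_total))      # 0.04
print(var_within(sigma2, rho, n_total))  # 0.01
```

Under these assumptions the ratio of the two variances is (1 - rho) / 2, so even uncorrelated responses halve the variance, and positive correlation shrinks it further.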

However, this advantage of within-subjects designs is frequently overridden in social psychology by the appeal of between-subjects designs. The latter are widely regarded as “cleaner” because they avoid carryover effects, in which one condition may affect responses to subsequent conditions experienced by the same participant. Within-subjects designs can also be difficult to construct when studies involve deception (even just deception about the purpose of the study) and one-shot encounters. Because of this, between-subjects designs are much more common in social psychology than within-subjects designs: investigators don’t regard the complexity of conducting within-subjects designs as worth it for the gain in power, which they regard as the primary advantage of within-subjects designs.

I want to point out another, related reason for using within-subjects designs: between-subjects experiments often do not allow consistent estimation of the parameters of interest. Now, between-subjects designs are great for estimating average treatment effects (ATEs), and ATEs can certainly be of great interest. For example, if one is interested in how a design change to a web site will affect sales, an ATE estimated from an A-B test with the very same population will be useful. But this isn’t enough for psychological science for two reasons. First, social psychology experiments are usually very different from the circumstances of potential application: the participants are undergraduate students in psychology and the manipulations and situations are not realistic. So the ATE from a psychology experiment might not say much about the ATE for a real intervention. Second, social psychologists regard themselves as building and testing theories about psychological processes. By their nature, psychological processes occur within individuals. So an ATE won’t do; in fact, it can be a substantially biased estimate of the psychological parameter of interest.

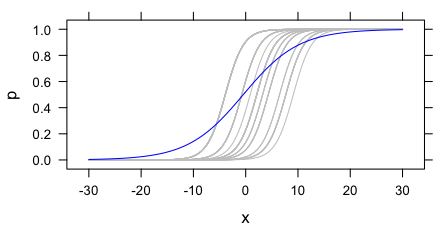

To illustrate this problem, consider an example where the outcome of an experiment is whether the participant says that a job candidate should be hired. For simplicity, let’s say this is a binary outcome: either they say to hire them or not. Their judgements might depend on some discrete scalar X. Different participants may have different thresholds for hiring the applicant, but otherwise be affected by X in the same way. In a logistic model, that is, each participant has their own intercept but all the slopes are the same. This is depicted with the grey curves in the figure.2

These grey curves can be estimated if one has multiple observations per participant at different values of X. However, in a between-subjects experiment, this is not the case. As an estimate of a parameter of the psychological process common to all the participants, the estimated slope from a between-subjects experiment will be biased. This is clear in the figure above: the blue curve (the marginal expectation function) is shallower than any of the individual curves.
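The attenuation can be checked numerically. The following is a minimal sketch, not the computation behind the figure: it assumes a common within-person slope `BETA` of 1 and five hypothetical participant intercepts, averages the individual logistic curves to get the marginal curve, and then measures that curve's slope on the logit scale.

```python
import math


def logistic(z):
    return 1.0 / (1.0 + math.exp(-z))


def logit(p):
    return math.log(p / (1.0 - p))


BETA = 1.0  # common within-person slope (assumed value)
INTERCEPTS = [-4.0, -2.0, 0.0, 2.0, 4.0]  # hypothetical participant thresholds


def marginal_prob(x):
    """Average P(hire | x) over participants: the population-level curve."""
    return sum(logistic(a + BETA * x) for a in INTERCEPTS) / len(INTERCEPTS)


def marginal_slope(x0=-0.5, x1=0.5):
    """Slope of the marginal curve on the logit scale between x0 and x1."""
    return (logit(marginal_prob(x1)) - logit(marginal_prob(x0))) / (x1 - x0)


print(f"within-person slope: {BETA:.3f}")
print(f"marginal slope:      {marginal_slope():.3f}")
```

With these made-up intercepts the marginal slope comes out at roughly 0.4, well under the slope of 1 that every individual shares. Spreading the intercepts further apart flattens the marginal curve even more.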

More generally, between-subjects experiments are good for estimating ATEs and making striking demonstrations. But they are often insufficient for investigating psychological processes since any heterogeneity — even only in intercepts — produces biased estimates of the parameters of psychological processes, including parameters that are universal in the population.

I see this as a strong motivation for doing more within-subjects experiments in social psychology. Unlike the power motivation for within-subjects designs, this isn’t solved by getting a larger sample of individuals. Instead, investigators need to think carefully about whether their experiments estimate any quantity of interest when there is substantial heterogeneity — as there generally is.3

1. And to more precisely estimate these differences. Though social psychologists often don’t care about estimation, since many social psychological theories are only directional.

2. This example is very directly inspired by Alan Agresti’s Categorical Data Analysis, p. 500.

3. The situation is made a bit “better” by the fact that social psychologists are often only concerned with determining the direction of effects, so maybe aren’t worried that their estimates of parameters are biased. Of course, this is a problem in itself if the direction of the effect varies by individual. Here I have only treated the simpler case of a universal function subject to a random shift.

Marginal evidence for psychological processes

Some comments on problems with investigating psychological processes using estimates of average (i.e. marginal) effects. Hence the play on words in the title.

Social psychology makes a lot of being theoretical. This generally means not just demonstrating an effect, but providing evidence about the psychological processes that produce it. Psychological processes are, it is agreed, intra-individual processes. To tell a story about a psychological process is to posit something going on “inside” people. It is quite reasonable that this is how social psychology should work — and it makes it consistent with much of cognitive psychology as well.

But the evidence that social psychology uses to support these theories about these intra-individual processes is largely evidence about effects of experimental conditions (or, worse, non-manipulated measures) averaged across many participants. That is, it is using estimates of marginal effects as evidence of conditional effects. This is intuitively problematic. Now, there is no problem when using experiments to study effects and processes that are homogeneous in the population. But, of course, they aren’t: heterogeneity abounds. There is variation in how factors affect different people. This is why the causal inference literature has emphasized the differences among the average treatment effect, (average) treatment effect on the treated, local average treatment effect, etc.

Not only is this disconnect between marginal evidence and conditional theory trouble in the abstract, we know it has already produced many problems in the social psychology literature.1 Baron and Kenny (1986) is the most cited paper published in the Journal of Personality and Social Psychology, the leading journal in the field. It paints a rosy picture of what it is like to investigate psychological processes. The methods of analysis it proposes for investigating processes are almost ubiquitous in social psych.2 The trouble is that this approach is severely biased in the face of heterogeneity in the processes under study. This is usually described as a problem of correlated error terms, omitted-variables bias, or adjusting for post-treatment variables. This is all true. But, in the most common uses, it is perhaps more natural to think of it as a problem of mixing up marginal (i.e. average) and conditional effects.3

What’s the solution? First, it is worth saying that average effects are worth investigating! Especially if you are evaluating an intervention or drug that might really be used, or if you are working at another level of analysis than psychology. But if psychological processes are your thing, you must do better.

Social psychologists sometimes do condition on individual characteristics, but often this is a measure of a single trait (e.g., need for cognition) that cannot plausibly exhaust all (or even much) of the heterogeneity in the effects under study. Without much larger studies, they cannot condition on more characteristics because of estimation problems (too many parameters for their N). So there is bound to be substantial heterogeneity.

Beyond this, I think social psychology could benefit from a lot more within-subjects experiments. Modern statistical computing (e.g., tools for fitting mixed-effects or multilevel models) makes it possible, even easy, to use such data to estimate effects of the manipulated factors for each participant. If social psychologists want to make credible claims about processes, then within-subjects designs (likely with many measurements of each person) are a good direction to explore more thoroughly.
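As a rough illustration of what many measurements per person buy you, here is a minimal sketch that simulates a within-subjects study and estimates a separate slope for each participant. It deliberately uses plain per-participant least squares (no pooling) instead of a mixed-effects model, so that it runs with only the standard library. The effect sizes, noise level, and function names are all assumptions made up for the example.

```python
import random


def ols_slope(xs, ys):
    """Least-squares slope of ys on xs for one participant."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


def simulate_and_estimate(n_participants=30, seed=0):
    """Simulate a within-subjects study; return (true, estimated) slopes."""
    rng = random.Random(seed)
    xs = [i / 10 for i in range(-20, 21)]  # 41 levels of the manipulated factor
    true_slopes, estimated_slopes = [], []
    for _ in range(n_participants):
        intercept = rng.gauss(0.0, 1.0)  # person-specific baseline
        slope = rng.gauss(1.0, 0.8)      # person-specific effect (assumed heterogeneous)
        ys = [intercept + slope * x + rng.gauss(0.0, 0.5) for x in xs]
        true_slopes.append(slope)
        estimated_slopes.append(ols_slope(xs, ys))
    return true_slopes, estimated_slopes


true_slopes, estimated_slopes = simulate_and_estimate()
worst = max(abs(t - e) for t, e in zip(true_slopes, estimated_slopes))
print(f"largest per-participant estimation error: {worst:.3f}")
```

A mixed-effects model would additionally shrink each participant's estimate toward the group mean, which helps when there are fewer measurements per person than in this sketch.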

1. The situation is bad enough that I (and some colleagues) certainly don’t even take many results in social psych as more than providing a possibly interesting vocabulary.

2. Luckily, my sense is that they are waning a bit, partially because of illustrations of the method’s bias.

3. To translate to the terms used before, note that we want to condition on unobserved (latent) heterogeneity. If one doesn’t, then there is omitted variable bias. This can be done with models designed for this purpose, such as random effects models.

Traits, adaptive systems & dimensionality reduction

Psychologists have posited numerous psychological traits and described causal roles they ought to play in determining human behavior. Most often, the canonical measure of a trait is a questionnaire. Investigators obtain this measure for some people and analyze how their scores predict some outcomes of interest. For example, many people have been interested in how psychological traits affect persuasion processes. Traits like need for cognition (NFC) have been posited and questionnaire items developed to measure them. Among other things, NFC affects how people respond to messages with arguments of varying quality.

How useful are these traits for explanation, prediction, and adaptive interaction? I can’t address all of this here, but I want to sketch an argument for their irrelevance to adaptive interaction — and then offer a tentative rejoinder.

Interactive technologies can tailor their messages to the tastes and susceptibilities of the people interacting with and through them. It might seem that these traits should figure in the statistical models used to make these adaptive selections. After all, some of the possible messages fit for, e.g., coaching a person to meet their exercise goals are more likely to be effective for low NFC people than high NFC people, and vice versa. However, the standard questionnaire measures of NFC often cannot be obtained for most users: certainly not in commerce settings, and even people signing up for a mobile coaching service likely don’t want to answer pages of questions. On the other hand, some Internet and mobile services have other abundant data available about their users, which could perhaps be used to construct an alternative measure of these traits. The trait-based-adaptation recipe is:

- obtain the questionnaire measure of the trait for a sample,

- predict this measure with data available for many individuals (e.g., log data),

- use this model to construct a measure for out-of-sample individuals.

This new measure could then be used to personalize the interactive experience based on this trait, such that if a version performs well (or poorly) for people with a particular score on the trait, then use (or don’t use) that version for people with similar scores.
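The recipe can be sketched in a few lines. This is a toy version under loud assumptions: a single made-up log feature stands in for "data available for many individuals", the survey scores are invented, a one-variable least-squares line stands in for whatever predictive model one would really use, and the message names and cutoff are hypothetical.

```python
def fit_line(xs, ys):
    """Least-squares intercept and slope for predicting ys from xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return mean_y - slope * mean_x, slope


# Step 1: questionnaire scores for a small surveyed sample (invented numbers).
survey_log_feature = [1.0, 2.0, 3.0, 4.0, 5.0]  # hypothetical logged behavior
survey_nfc_score = [2.1, 2.9, 4.2, 4.8, 6.0]    # hypothetical NFC questionnaire scores

# Step 2: predict the questionnaire measure from the log data.
intercept, slope = fit_line(survey_log_feature, survey_nfc_score)


# Step 3: construct the measure for people who never took the questionnaire.
def predicted_nfc(log_feature):
    return intercept + slope * log_feature


def choose_message(log_feature, cutoff=4.0):
    """Pick a message version from the predicted trait score (hypothetical rule)."""
    return "strong-argument" if predicted_nfc(log_feature) >= cutoff else "simple-cue"


print(choose_message(1.5))  # simple-cue
print(choose_message(4.5))  # strong-argument
```

Note that `choose_message` depends on the log feature only through the predicted trait score, which is exactly the two-step projection questioned in the next paragraph.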

But why involve the trait at all? Why not just personalize the interactive experience based on the responses of similar others? Since the new measure of the trait is just based on the available behavioral, demographic, and other logged data, one could simply predict responses based on those measures. Put in geometric terms, if the goal is to project the effects of different messages onto available log data, why should one project the questionnaire measure of the trait onto the available log data and then project the effects onto this projection? This seems especially unappealing if one doesn’t fully trust the questionnaire measure to be accurate or one can’t be sure about the full set of traits that make a (substantial) difference.

I find this argument quite intuitively appealing, and it seems to resonate with others.1 But I think there are some reasons the recipe above could still be appealing.

One way to think about this recipe is as dimensionality reduction guided by theory about psychological traits. Available log data can often be used to construct countless predictors (or “features”, as the machine learning people call them). So one can very quickly get into a situation where the effective number of parameters for a full model predicting the effects of different messages is very large and will make for poor predictions. Nothing — no, not penalized regression, not even a support vector machine — makes this problem go away. Instead, one has to rely on the domain knowledge of the person constructing the predictors (i.e., doing the “feature engineering”) to pick some good ones.

So the tentative rejoinder is this: established psychological traits might often make good dimensions with which to predict effects of different versions of a message, intervention, or experience. And they may “come with” suggestions about what kinds of log data might serve as measures of them. They would be expected to be reusable across settings. Thus, I think this recipe nonetheless deserves serious attention.

1. I owe some clarity on this to some conversations with Mike Nowak and Maurits Kaptein.

Applying social psychology

Some reflections on how “quantitative” social psychology is and how this matters for its application to design and decision-making — especially in industries touched by the Internet.

In many ways, contemporary social psychology is dogmatically quantitative. Investigators run experiments, measure quantitative outcomes (even coding free responses to make them amenable to analysis), and use statistics to characterize the collected data.

On the other hand, social psychology’s processes of stating and integrating its conclusions remain largely qualitative. Many hypotheses in social psychology state that some factor affects a process or outcome in one direction (i.e., “call” either beta > 0 or beta < 0). Reviews of research in social psychology often start with a simple effect and then note how many other variables moderate this effect. This is all quite fitting with the dominance of null-hypothesis significance testing (NHST) in much of psychology: rather than producing point estimates or confidence intervals for causal effects, it is enough to simply see how likely the observed data is given that there is no effect.1

Of course, there have been many efforts to change this. Many journals require reporting effect sizes. This is a good thing, but these effect sizes are rarely predicted by social psychological theory. Rather, they are reported to aid judgments of whether a finding is not only statistically significant but substantively or practically significant, while the theory predicts only the direction of the effect.

Not only is this process of reporting and combining results not quantitative in many ways, but it requires substantial inference from the particular settings of conducted experiments to the present settings. This actually helps to make sense of the practices described above: many social psychology experiments are conducted in conditions and with populations that are so different from those in which people would like to apply the resulting theories that expecting consistency of effect sizes is implausible.2

This is not to say that these studies cannot tell us a good deal about how people will behave in many circumstances. It’s just that figuring out what they predict and whether these predictions are reliable is a very messy, qualitative process. Thus, when it comes to making decisions (about a policy, intervention, or service) based on social-psychological research, this process is largely qualitative. Decision-makers can ask: which effects are in play? What is their direction? With interventions and measurement that are very likely different from the present case, how large were the effects?3

Sometimes this is the best that social science can provide. And such answers can be quite useful in design. The results of psychology experiments can often be very effective when used generatively. For example, designers can use taxonomies of persuasive strategies to dream up some ways of producing desired behavior change.

Nonetheless, I think all this can be contrasted with some alternative practices that are both more quantitative and require less of this uneasy generalization. First, social scientists can give much more attention to point estimates of parameters. While not without its (other) flaws, the economics literature on financial returns to education has aimed to provide, criticize, and refine estimates of just how much wages increase (on average) with more education.4

Second, researchers can avoid much of the messiest kinds of generalization altogether. Within the Internet industry, product optimization experiments are ubiquitous. Google, Yahoo, Facebook, Microsoft, and many others are running hundreds to thousands of simultaneous experiments with parts of their services. This greatly simplifies generalization: the exact intervention under consideration has just been tried with a random sample from the very population it will be applied to. If someone wants to tweak the intervention, just try it again before launching. This process still involves human judgment about how to react to these results.5 An even more extreme alternative is when machine learning is used to fine-tune, e.g., recommendations without direct involvement (or understanding) by humans.
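For concreteness, the kind of point estimate such a product experiment yields can be sketched as a difference in conversion rates with a normal-approximation confidence interval. The counts below are invented, and `ate_with_ci` is an illustrative helper, not any company's actual tooling.

```python
import math


def ate_with_ci(conv_control, n_control, conv_treat, n_treat, z=1.96):
    """Difference in conversion rates with a normal-approximation interval.

    Returns (estimate, lower bound, upper bound); z=1.96 gives roughly 95%.
    """
    p_c = conv_control / n_control
    p_t = conv_treat / n_treat
    ate = p_t - p_c
    se = math.sqrt(p_c * (1 - p_c) / n_control + p_t * (1 - p_t) / n_treat)
    return ate, ate - z * se, ate + z * se


# Invented counts: 100 of 1000 control users convert, 130 of 1000 treated users.
ate, lower, upper = ate_with_ci(100, 1000, 130, 1000)
print(f"ATE = {ate:.3f}, 95% CI = ({lower:.3f}, {upper:.3f})")
```

Because the sample is drawn from the population the change will be launched to, this interval speaks directly to the decision at hand, subject to the caveats about scaling up noted in the footnote.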

So am I saying that social psychology (at least as an enterprise that is useful to designers and decision-makers) is going to be replaced by simple “bake-off” experiments and machine learning? Not quite. Unlike product managers at Google, many decision-makers don’t have the ability to cheaply test a proposed intervention on their population of interest.6 Even at Google, many changes (or new products) under consideration are too difficult to build for all of them to be tested: one has to decide among an overabundance of options before the most directly applicable data could be available. This is consistent with my note above that social-psychological findings can make excellent inspiration during idea generation and early evaluation.

1. To parrot Andrew Gelman, in social phenomena, everything affects everything else. There are no betas that are exactly zero.

2. It’s also often implausible that the direction of the effect must be preserved.

3. Major figures in social psychology, such as Lee Ross, have worked on trying to better anticipate the effects of social interventions from theory. It isn’t easy.

4. The diversity of the manipulations used by social psychologists ostensibly studying the same thing can make this more difficult.

5. Generalization is not avoided. In particular, decision-makers often have to consider what would happen if an intervention tested with 1% of the population is launched for the whole population. There are all kinds of issues relating to peer influence, network effects, congestion, etc., here that don’t allow for simple extrapolation from the treatment effects identified by the experiment. Nonetheless, these challenges obviously apply to most research that aims to predict the effects of causes.

6. However, Internet services play a more and more central role in many parts of our life, so this doesn’t just have to be limited to the Internet industry itself.

Ideas behind their time: formal causal inference?

Alex Tabarrok at Marginal Revolution blogs about how some ideas seem notably behind their time:

> We are all familiar with ideas said to be ahead of their time, Babbage’s analytical engine and da Vinci’s helicopter are classic examples. We are also familiar with ideas “of their time,” ideas that were “in the air” and thus were often simultaneously discovered such as the telephone, calculus, evolution, and color photography. What is less commented on is the third possibility, ideas that could have been discovered much earlier but which were not, ideas behind their time.

In comparing ideas behind and ahead of their times, it’s worth considering the processes that identify them as such.

In the case of ideas ahead of their time, we rely on records and other evidence of their genesis (e.g., accounts of the use of flamethrowers at sea by the Byzantines). Later users and re-discoverers of these ideas are then in a position to marvel at their early genesis. In trying to see whether some idea qualifies as ahead of its time, this early genesis, lack of use or underuse, followed by extensive use and development, together serve as evidence for “ahead of its time” status.

On the other hand, in identifying ideas behind their time, it seems that we need different sorts of evidence. Tabarrok uses the standard of whether their fruits could have been produced a long time earlier (“A lot of the papers in say experimental social psychology published today could have been written a thousand years ago so psychology is behind its time”). We need evidence that people in a previous time had all the intellectual resources to generate and see the use of the idea. Perhaps this makes identifying ideas behind their time harder or more contentious.

Y(X = x) and P(Y | do(x))

Perhaps formal causal inference — and some kind of corresponding new notation, such as Pearl’s do(x) operator or potential outcomes — is an idea behind its time.1 Judea Pearl’s account of the history of structural equation modeling seems to suggest just this: exactly what the early developers of path models (Wright, Haavelmo, Simon) needed was new notation that would have allowed them to distinguish what they were doing (making causal claims with their models) from what others were already doing (making statistical claims).2

In fact, in his recent talk at Stanford, Pearl suggested just this: that if, say, the equality operator = had been replaced with some kind of assignment operator (say, :=), formal causal inference might have developed much earlier. We might be a lot further along in social science and applied evaluation of interventions if this had happened.
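The distinction the notation is meant to capture can be shown with a small simulation. This is a toy structural model of my own construction for illustration, not an example from Pearl: a binary confounder Z is assigned first, then X := f(Z, noise), then Y := X + 2Z + noise. Conditioning on X mixes the effect of X with that of Z, while overriding the assignment of X (the do operator) isolates the causal effect, which is 1 by construction.

```python
import random


def draw(rng, do_x=None):
    """Draw (x, y) from the toy model; do_x overrides the assignment of X."""
    z = 1 if rng.random() < 0.5 else 0                      # Z := coin flip
    if do_x is None:
        x = 1 if rng.random() < (0.8 if z else 0.2) else 0  # X := f(Z, noise)
    else:
        x = do_x                                            # intervention: X := do_x
    y = 1.0 * x + 2.0 * z + rng.gauss(0.0, 1.0)             # Y := X + 2Z + noise
    return x, y


def mean(values):
    return sum(values) / len(values)


def observational_contrast(rng, n=20000):
    """Estimate E[Y | X = 1] - E[Y | X = 0] from passively observed draws."""
    draws = [draw(rng) for _ in range(n)]
    y1 = [y for x, y in draws if x == 1]
    y0 = [y for x, y in draws if x == 0]
    return mean(y1) - mean(y0)


def interventional_contrast(rng, n=20000):
    """Estimate E[Y | do(X = 1)] - E[Y | do(X = 0)] by setting X directly."""
    y1 = [draw(rng, do_x=1)[1] for _ in range(n)]
    y0 = [draw(rng, do_x=0)[1] for _ in range(n)]
    return mean(y1) - mean(y0)


rng = random.Random(1)
print(f"conditioning on X: {observational_contrast(rng):.2f}")
print(f"intervening on X:  {interventional_contrast(rng):.2f}")
```

In this model the conditional contrast should land near 2.2 and the interventional contrast near 1.0, up to simulation noise. The two quantities would be written identically if = were the only operator available.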

This example raises some questions about the criterion for ideas behind their time that “people in a previous time had all the intellectual resources to generate and see the use of the idea” (above). Pearl is a computer scientist by training and credits this background with his approach to causality as a problem of getting the formal language right, or moving between multiple formal languages. So we may owe this recent development to comfort with creating and evaluating the qualities of formal languages for practical purposes, a comfort found among computer scientists. Of course, philosophers and logicians, for example, have also long been comfortable with generating new formalisms. I think of Frege here.

So I’m not sure whether formal causal inference is an idea behind its time (or, if so, how far behind). But I’m glad we have it now.

1. There is a “lively” debate about the relative value of these formalisms. For many of the dense causal models applicable to the social sciences (everything is potentially a confounder), potential outcomes seem like a good fit. But they can become awkward as the causal models get complex, with many exclusion restrictions (i.e. missing edges).

2. See chapter 5 of Pearl, J. (2009). Causality: Models, Reasoning and Inference. 2nd Ed. Cambridge University Press.