Against between-subjects experiments

This post gives a less widely known reason for using within-subjects experimental designs in psychological science. In a within-subjects experiment, each participant experiences multiple conditions (say, multiple persuasive messages), while in a between-subjects experiment, each participant experiences only one condition.

If you ask a random social psychologist, “Why would you run a within-subjects experiment instead of a between-subjects experiment?”, the most likely answer is “power” — within-subjects experiments provide more power. That is, with the same number of participants, within-subjects experiments make it easier for investigators to tell that observed differences between conditions are not due to chance.¹

Why do within-subjects experiments increase power? Because responses by the same individual are generally dependent; more specifically, they are often positively correlated. Say an experiment involves evaluating products, people, or policy proposals under different conditions, such as the presence of different persuasive cues or following different primes. It is often the case that participants who rate an item high on a scale under one condition will rate other items high on that scale under other conditions. Or participants with short response times for one task will tend to have relatively short response times for another task. Et cetera. This positive association might be due to stable characteristics of people or to transient differences such as mood. Thus, the increase in power is due to heterogeneity in how individuals respond to the stimuli: comparing conditions within each participant removes that person-level variation from the comparison.
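A quick simulation can make the power argument concrete. This is only a sketch under assumptions not stated in the post: each participant has a normally distributed stable “person” component that shifts all of their responses, responses also carry independent normal noise, and the condition effect is a constant shift. The function name `simulate_ses` and all parameter values are hypothetical. It compares the standard error of the estimated condition difference when the same n participants complete both conditions with the standard error when those n participants are split between the two conditions.

```python
import random
import statistics

def simulate_ses(n=200, effect=0.3, person_sd=1.0, noise_sd=0.5, seed=1):
    """Hypothetical sketch: SE of the estimated condition difference per design."""
    rng = random.Random(seed)

    # Within-subjects: every participant responds in both conditions, and a
    # stable person-level component shifts both of their responses.
    diffs = []
    for _ in range(n):
        person = rng.gauss(0.0, person_sd)
        a = person + rng.gauss(0.0, noise_sd)
        b = person + effect + rng.gauss(0.0, noise_sd)
        diffs.append(b - a)  # the person component cancels in the difference
    se_within = statistics.stdev(diffs) / n ** 0.5

    # Between-subjects: the same n participants are split into two groups,
    # so person-level variation remains in every comparison.
    half = n // 2
    group_a = [rng.gauss(0.0, person_sd) + rng.gauss(0.0, noise_sd) for _ in range(half)]
    group_b = [rng.gauss(0.0, person_sd) + effect + rng.gauss(0.0, noise_sd) for _ in range(half)]
    se_between = (statistics.variance(group_a) / half
                  + statistics.variance(group_b) / half) ** 0.5
    return se_within, se_between

se_within, se_between = simulate_ses()
print(f"within-subjects SE {se_within:.3f}, between-subjects SE {se_between:.3f}")
```

Under these settings each within-person difference has variance 2 × noise_sd² = 0.5, while each between-subjects observation has variance person_sd² + noise_sd² = 1.25, so the within-subjects standard error should come out near 0.05 against roughly 0.16 for the between-subjects design. Setting `person_sd` to 0 removes most of the gap, which is the sense in which the power gain comes from heterogeneity across individuals.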

However, this advantage of within-subjects designs is frequently outweighed in social psychology by the appeal of between-subjects designs. The latter are widely regarded as “cleaner” as they avoid carryover effects — in which one condition may affect responses to subsequent conditions experienced by the same participant. Within-subjects designs can also be difficult to implement when studies involve deception (even just deception about the purpose of the study) or one-shot encounters. Because of this, between-subjects designs are much more common in social psychology than within-subjects designs: investigators don’t regard the complexity of conducting within-subjects designs as worth it for the gain in power, which they regard as the primary advantage of within-subjects designs.

I want to point out another — but related — reason for using within-subjects designs: between-subjects experiments often do not allow consistent estimation of the parameters of interest. Now, between-subjects designs are great for estimating average treatment effects (ATEs), and ATEs can certainly be of great interest. For example, if one is interested in how a design change to a web site will affect sales, an ATE estimated from an A/B test on the very same population will be useful. But this isn’t enough for psychological science, for two reasons. First, social psychology experiments are usually very different from the circumstances of potential application: the participants are undergraduate students in psychology and the manipulations and situations are not realistic. So the ATE from a psychology experiment might not say much about the ATE for a real intervention. Second, social psychologists regard themselves as building and testing theories about psychological processes. By their nature, psychological processes occur within individuals. So an ATE won’t do — in fact, it can be a substantially biased estimate of the psychological parameter of interest.

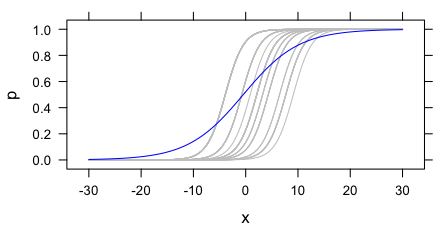

To illustrate this problem, consider an example where the outcome of an experiment is whether the participant says that a job candidate should be hired. For simplicity, let’s say this is a binary outcome: either they say to hire the candidate or not. Their judgements might depend on some discrete scalar X. Different participants may have different thresholds for hiring the applicant, but otherwise be affected by X in the same way. That is, in a logistic model, each participant has their own intercept but all the slopes are the same. This is depicted by the grey curves in the figure.²
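In symbols, the model just described can be sketched as follows, adding one assumption the post does not make: that the participant intercepts are normally distributed, with mean μ and variance σ². Here Y_ij is participant i’s hire/no-hire judgement at value x_ij. The second line averages the individual curves over participants and uses a standard approximation for logistic models with normal random intercepts.

```latex
\begin{aligned}
\Pr(Y_{ij} = 1 \mid x_{ij}) &= \operatorname{logit}^{-1}(\alpha_i + \beta x_{ij}),
  \qquad \alpha_i \sim \mathcal{N}(\mu, \sigma^2) \\
\Pr(Y = 1 \mid x) &= \mathbb{E}_{\alpha}\!\left[\operatorname{logit}^{-1}(\alpha + \beta x)\right]
  \approx \operatorname{logit}^{-1}\!\left(\frac{\mu + \beta x}{\sqrt{1 + c^{2}\sigma^{2}}}\right),
  \qquad c = \frac{16\sqrt{3}}{15\pi} \approx 0.588
\end{aligned}
```

Under this approximation the marginal curve has logit-scale slope of roughly β/√(1 + c²σ²), which is smaller than the common individual slope β whenever σ > 0, and shrinks further as the spread of intercepts grows.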

These grey curves can be estimated if one has multiple observations per participant at different values of X. However, in a between-subjects experiment, this is not the case. As an estimate of a parameter of the psychological process common to all the participants, the estimated slope from a between-subjects experiment will be biased. This is clear in the figure above: the blue curve (the marginal expectation function) is shallower than any of the individual curves.
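The flattening of the blue curve can also be checked numerically. The sketch below again assumes normally distributed intercepts (an assumption, not something the post specifies), and the function names and parameter values are hypothetical. It averages the individual curves over a deterministic grid of intercept quantiles to get the marginal curve, then measures that curve’s slope on the logit scale. Every individual curve has logit-scale slope exactly `beta`, so any value below `beta` is the attenuation shown in the figure.

```python
import math
from statistics import NormalDist

def expit(z):
    return 1.0 / (1.0 + math.exp(-z))

def logit(p):
    return math.log(p / (1.0 - p))

def marginal_prob(x, beta, mu=0.0, sigma=2.0, grid=2000):
    """Marginal P(hire | x): the individual curves averaged over intercepts.

    Intercepts are taken at midpoint quantiles of a normal distribution,
    so the result is deterministic.
    """
    dist = NormalDist(mu, sigma)
    total = 0.0
    for k in range(grid):
        alpha = dist.inv_cdf((k + 0.5) / grid)
        total += expit(alpha + beta * x)
    return total / grid

def marginal_logit_slope(beta, x0=0.0, h=1e-3, **kw):
    """Slope of the marginal curve on the logit scale (central difference at x0)."""
    up = logit(marginal_prob(x0 + h, beta, **kw))
    down = logit(marginal_prob(x0 - h, beta, **kw))
    return (up - down) / (2 * h)

# More spread in intercepts gives a shallower marginal curve,
# even though every participant's own slope is beta = 1.
for sigma in (0.5, 1.0, 2.0, 4.0):
    print(sigma, round(marginal_logit_slope(1.0, sigma=sigma), 3))
```

With a tiny `sigma` (almost no heterogeneity) the marginal slope comes back essentially equal to `beta`; the attenuation appears only because participants differ in their intercepts.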

More generally, between-subjects experiments are good for estimating ATEs and making striking demonstrations. But they are often insufficient for investigating psychological processes, since in nonlinear models like the logistic model above, any heterogeneity — even only in intercepts — produces biased estimates of the parameters of psychological processes, including parameters that are universal in the population.

I see this as a strong motivation for doing more within-subjects experiments in social psychology. Unlike the power motivation for within-subjects designs, this isn’t solved by getting a larger sample of individuals. Instead, investigators need to think carefully about whether their experiments estimate any quantity of interest when there is substantial heterogeneity — as there generally is.³
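A small simulation illustrates why a larger sample does not help. It is a sketch under assumptions not stated in the post: normally distributed intercepts, a binary X coded 0/1, and the arbitrary values beta = 1 and sigma = 2; all function names are hypothetical. The between-subjects estimate is the logistic-regression slope for a binary X, which equals the difference in empirical log-odds between the two groups. The within-subjects estimate is the conditional maximum-likelihood estimator for matched pairs, log(n01/n10), computed from participants whose two judgements differ; conditioning on those discordant pairs cancels each participant’s intercept.

```python
import math
import random

def expit(z):
    return 1.0 / (1.0 + math.exp(-z))

def log_odds(p):
    return math.log(p / (1.0 - p))

def between_estimate(n, beta, sigma, rng):
    """Each participant sees only x = 0 or x = 1 and responds once."""
    hires = [0, 0]
    counts = [0, 0]
    for i in range(n):
        alpha = rng.gauss(0.0, sigma)  # participant-specific threshold
        x = i % 2                      # alternate assignment; participants are i.i.d.
        counts[x] += 1
        hires[x] += rng.random() < expit(alpha + beta * x)
    # With a binary X, the logistic-regression slope is the difference in log-odds.
    return log_odds(hires[1] / counts[1]) - log_odds(hires[0] / counts[0])

def within_estimate(n, beta, sigma, rng):
    """Each participant responds once at x = 0 and once at x = 1."""
    n01 = n10 = 0  # discordant pairs: (no hire, hire) and (hire, no hire)
    for _ in range(n):
        alpha = rng.gauss(0.0, sigma)
        y0 = rng.random() < expit(alpha)
        y1 = rng.random() < expit(alpha + beta)
        if y1 and not y0:
            n01 += 1
        elif y0 and not y1:
            n10 += 1
    # Conditional MLE for matched pairs; alpha cancels out of this ratio.
    return math.log(n01 / n10)

def compare(n, beta=1.0, sigma=2.0, seed=0):
    rng = random.Random(seed)
    return between_estimate(n, beta, sigma, rng), within_estimate(n, beta, sigma, rng)

for n in (1_000, 100_000):
    b, w = compare(n)
    print(f"n={n:>7}: between-subjects {b:.3f}, within-subjects {w:.3f}")
```

As n grows, the between-subjects estimate should settle near the attenuated slope of the marginal curve, well below 1, while the within-subjects estimate should settle near the true common slope of 1. More participants only shrink the noise around each target; they do not move the between-subjects target.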

1. And to estimate these differences more precisely, though social psychologists often don’t care about estimation, since many social psychological theories are only directional.

2. This example is very directly inspired by Alan Agresti’s Categorical Data Analysis, p. 500.

3. The situation is made a bit “better” by the fact that social psychologists are often only concerned with determining the direction of effects, so maybe aren’t worried that their estimates of parameters are biased. Of course, this is a problem in itself if the direction of the effect varies by individual. Here I have only treated the simpler case of a universal function subject to a random shift.

For related points, see:

Hutchinson, J. Wesley, Wagner A. Kamakura, and John G. Lynch, Jr. (2000), “Unobserved Heterogeneity As An Alternative Explanation for ‘Reversal’ Effects in Behavioral Research.” Journal of Consumer Research, 27 (December), 323-344.

http://leeds.colorado.edu/asset/publication/lynch11.pdf

Abstract:

Behavioral researchers use analysis of variance (ANOVA) tests of differences between treatment means or chi-square tests of differences between proportions to provide support for empirical hypotheses about consumer behavior. These tests are typically conducted on data from “between-subjects” experiments in which participants were randomly assigned to conditions. We show that, despite using internally valid experimental designs such as this, aggregation biases can arise in which the theoretically critical pattern holds in the aggregate even though it holds for no (or few) individuals. First, we show that crossover interactions — often taken as strong evidence of moderating variables — can arise from the aggregation of two or more segments that do not exhibit such interactions when considered separately. Second, we show that certain context effects that have been reported for choice problems can result from the aggregation of two (or more) segments that do not exhibit these effects when considered separately. Given these threats to the conclusions drawn from experimental results, we describe the conditions under which unobserved heterogeneity can be ruled out as an alternative explanation based on one or more of the following: a priori considerations, derived properties, diagnostic statistics, and the results of latent class modeling. When these tests cannot rule out explanations based on unobserved heterogeneity, this is a serious problem for theorists who assume implicitly that the same theoretical principle works equally for everyone, but for random error. The empirical data patterns revealed by our diagnostics can expose the weakness in the theory but not fix it. It remains for the researcher to do further work to understand the underlying constructs that drive heterogeneity effects, and to revise theory accordingly.

Thanks for the pointer. I like the illustration with interaction effects. This is much more striking than my illustration, in which only the magnitude of the parameter is wrong (and so many social psych theories only make directional predictions anyway).

And I’m glad to see this point somewhere in the social psych literature. (And happy to see you’re busy disabusing social psychologists of the idea that causal inference about “mediators” is as easy as they seem to have thought it was.)

I was going to write more about this, as I think this is a neglected problem. As I point out here, even heterogeneity in intercepts is enough to bias estimates of a psychological parameter that is homogeneous. I would be fascinated by any further thoughts you have along these lines.